Effective Error Handling Strategies in Automated Tests

Let’s say you’ve chosen your test automation tool, done your homework, and written your test scripts. Everything is going according to plan so far. But when you run those ‘perfectly crafted tests’, you run into failures that have nothing to do with the application under test. They are your own doing, caused by the way you wrote the test scripts.

Errors in automated tests are quite common, but many of them can be avoided. This post will help you figure out where you might be going wrong and how to fix it.

What are errors in automated tests?

Errors in automated tests are mistakes in the test script itself. These can be programming errors (syntax mistakes, typos), incorrect locators (referencing non-existent UI elements), or issues with test data management. These errors prevent the test script from running as intended. They might cause the test to fail, hang indefinitely, or produce unreliable results.

Types of errors in automated tests

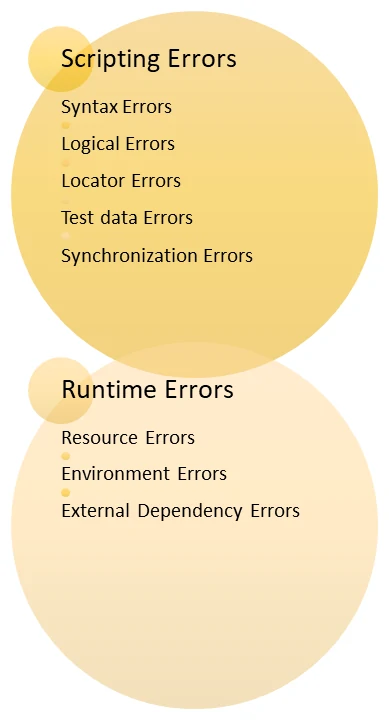

There are two main categories of errors in automated tests:

Script errors

These errors originate from mistakes within the test script itself and don’t necessarily reflect issues with the application under test. They can cause test failures but shouldn’t be confused with bugs. Here are some common types of script errors:

- Syntax errors: Typos, missing semicolons, or incorrect use of keywords in your programming language.

- Logical errors: Flaws in the test logic, like using an incorrect comparison operator or making assumptions about program flow. Read: UnknownMethodException in Selenium: How to Handle?

- Locator errors: Incorrect identifiers used to locate UI elements. This could be referencing an element that doesn’t exist, has changed its properties, or is not unique enough.

- Test data errors: Using invalid, missing, or inappropriate test data that leads to test failures.

- Synchronization errors: Not properly waiting for elements to load or actions to complete before interacting with them, leading to errors.

Runtime errors

These errors occur during the execution of the test script and might or might not indicate a problem with the application. Here are some examples:

- Resource errors: Issues like insufficient memory, network connectivity problems, or database access errors can disrupt test execution.

- Environment errors: Inconsistencies between the development, testing, and deployment environments can cause tests to fail even though the application itself functions correctly.

- External dependency errors: Failures in external systems or services that the application relies on can lead to test failures.

Besides these errors, you might also encounter false positives. These are failures reported by the test script even though the application is functioning correctly. This can happen due to flaky tests (tests that intermittently pass or fail), timing issues, or environmental factors.

How are errors and bugs different?

People tend to use the terms error and bug interchangeably, though there are distinct differences. While errors are mistakes in the test script, bugs are actual problems in the code of the application under test.

- Errors are often easier to pinpoint as they cause the test script to fail with clear error messages.

- Bugs may be trickier to diagnose, requiring investigation into the application’s behavior. Read: Minimizing Risks: The Impact of Late Bug Detection.

Bugs can manifest in various ways:

- Functional bugs: Features not working as intended.

- Performance bugs: Slowness, crashes, or memory leaks.

- Security bugs: Vulnerabilities that compromise data or system integrity.

- Usability bugs: Issues that make the application difficult or frustrating to use.

Here’s an analogy to help you understand this better. Imagine a test script as a recipe for making a cake, and the application as the kitchen it is baked in. Errors in the recipe (missing ingredients, wrong measurements) are like errors in the test script (syntax errors, bad locators). Problems with the kitchen itself (an oven that doesn’t hold its temperature) are like bugs in the application (coding errors, design flaws): even a perfect recipe will produce a bad cake.

Effective error-handling strategies for automated tests

In this section, we will look at the strategies that can be applied to handle errors in automated tests.

Solid test design

Solid test design is the foundation upon which your test scripts are built. It’s all about creating clear, concise, and maintainable tests that are less prone to errors from the get-go. You can do this by:

- Focusing on functionality: Well-designed tests target specific functionalities of the application under test. They avoid testing implementation details that might change without affecting the core functionality. This reduces the risk of errors due to code refactoring or minor UI adjustments. For example, instead of testing the exact color of a button, a well-designed test would verify that the button exists, is clickable, and leads to the expected outcome.

- Clear and concise test steps: Use clear and unambiguous language in your test steps. This makes the test logic easier to understand and reduces the chance of misinterpretations that could lead to errors. For example, instead of saying “Click the button,” clearly state the button’s label or purpose (e.g., “Click the ‘Login’ button”).

- Independent test cases: Strive to create independent test cases that rely on minimal setup and teardown. This prevents errors caused by cascading failures, where one failing test disrupts subsequent tests due to dependencies. For example, design your tests so that each one can be executed independently without relying on the success of previous tests.

- Reusable components: Break down complex test logic into reusable components or helper functions. This promotes code reuse and reduces the chance of repetitive errors across multiple tests. For example, create a reusable function for common actions like logging in or navigating to specific pages.

- Validate expected behavior: Don’t just perform actions; actively verify the expected behavior of the application after each interaction. Use assertions to compare actual results with expected outcomes. This helps identify errors early on in the test execution. For example, after clicking a button, assert that a specific element appears on the screen or that a confirmation message is displayed.

- Maintainable tests: Write tests that are easy to understand, modify, and extend. Use proper naming conventions, comments, and documentation to improve maintainability and prevent errors caused by outdated or poorly documented tests. For example, use descriptive test names and comments to explain the purpose and logic behind each test case. Read: How to Write Maintainable Test Scripts: Tips and Tricks.
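To make these principles concrete, here is a minimal sketch using Python’s built-in unittest. The FakeApp class is a hypothetical in-memory stand-in for the application under test; in a real suite, the log_in helper would drive your UI or API instead. Each test builds its own fresh app in setUp (independent test cases), shares the reusable log_in helper, uses a descriptive name, and asserts the expected outcome with an informative message.

```python
import unittest


class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.users = {"alice": "s3cret"}
        self.current_user = None
        self.message = ""

    def submit_login(self, username, password):
        if self.users.get(username) == password:
            self.current_user = username
            self.message = f"Welcome, {username}!"
        else:
            self.message = "Invalid credentials"


def log_in(app, username, password):
    """Reusable helper: every test logs in the same way."""
    app.submit_login(username, password)
    return app.message


class LoginTests(unittest.TestCase):
    def setUp(self):
        # A fresh app per test, so no test depends on another test's leftovers.
        self.app = FakeApp()

    def test_valid_login_shows_welcome_message(self):
        message = log_in(self.app, "alice", "s3cret")
        # Verify the outcome, not just that the action ran.
        self.assertEqual(message, "Welcome, alice!",
                         f"Expected a welcome message but found {message!r}")
        self.assertEqual(self.app.current_user, "alice")

    def test_wrong_password_is_rejected(self):
        message = log_in(self.app, "alice", "wrong")
        self.assertEqual(message, "Invalid credentials")
        self.assertIsNone(self.app.current_user)
```

Run it with python -m unittest. Because each test creates its own state, the two tests pass in any order and can be run individually.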

Robust test data management

Use fresh data sets for each test to avoid data contamination. Also, ensure the database is in a known state before and after tests by using fixtures or setup/teardown methods. You can then employ data-driven testing approaches where test data is externalized from the test script itself. This allows you to easily test different scenarios with various data sets and reduces the risk of errors due to hardcoded test data. For example, store login credentials or test data in a separate file (CSV, database) and reference them within your test scripts.
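Here is a minimal sketch of externalized, data-driven test data in Python. The CSV content is inlined through io.StringIO only to keep the example self-contained; in practice you would open() a separate file, such as a hypothetical login_cases.csv. The is_valid_login function is a hypothetical stand-in for the behavior under test, and unittest’s subTest reports each data row as its own result.

```python
import csv
import io
import unittest

# In a real suite this would live in a separate file, e.g. login_cases.csv.
LOGIN_CASES_CSV = """username,password,expected
alice,s3cret,success
alice,wrong,failure
,s3cret,failure
"""


def is_valid_login(username, password):
    """Hypothetical stand-in for the system under test."""
    return username == "alice" and password == "s3cret"


def load_cases(source):
    """Read test cases from any file-like object containing CSV data."""
    return list(csv.DictReader(source))


class DataDrivenLoginTests(unittest.TestCase):
    def test_login_cases(self):
        for row in load_cases(io.StringIO(LOGIN_CASES_CSV)):
            # subTest keeps going after a failing row and labels each result.
            with self.subTest(username=row["username"], password=row["password"]):
                expected = row["expected"] == "success"
                self.assertEqual(is_valid_login(row["username"], row["password"]), expected)
```

Adding a new scenario now means adding a row to the data file, not writing another test.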

Smart locators

Smart locators are an advanced approach to identifying UI elements in automated tests. They aim to overcome the limitations of traditional locators (like IDs, names, or CSS selectors) that can become brittle and unreliable due to UI changes. Through smart locators, you can achieve the following:

- Improved maintainability: Smart locators are designed to be more resilient to UI changes. They often rely on a combination of attributes instead of a single one, making them less prone to errors caused by minor UI adjustments. This reduces the need for frequent updates to your test scripts when the UI layout changes, minimizing maintenance overhead. For example, instead of using a flaky ID that might change in future updates, a smart locator could combine element text content with its parent element’s tag name for a more stable identification strategy.

- Reduced false positives: Traditional locators can sometimes mistakenly identify the wrong element if multiple elements share similar attributes. Smart locators often incorporate logic to prioritize the most relevant element based on its position, context, or other factors. This reduces the occurrence of false positives (tests failing due to the script interacting with an unintended element) and leads to more reliable test results. For example, imagine a page with multiple buttons labeled “Submit.” A smart locator could consider the button’s surrounding text or its position within the form to ensure it interacts with the correct button. Read: Positive and Negative Testing: Key Scenarios, Differences, and Best Practices.

- Self-healing capabilities: Some smart locators come with self-healing functionalities. These features can analyze past test executions and identify cases where locators may have become unreliable. The locator can then automatically update itself to reflect changes in the UI, preventing test failures. Read more about Self-healing Tests. For example, a testing framework might detect that a previously used locator for a button no longer works. The smart locator could automatically adjust its strategy based on the element’s new attributes, ensuring the test continues to function correctly.

- AI-powered identification: Advanced smart locators utilize artificial intelligence (AI) techniques like image recognition or computer vision. This allows them to identify elements based on their visual characteristics, regardless of the underlying HTML structure. This approach is particularly beneficial for complex UIs or web applications with dynamic content that might be difficult to target with traditional locators. For example, an AI-powered smart locator could identify a specific button based on its unique icon, even if the button’s text or other attributes change in future updates. Read: AI In Software Testing.

- Accessibility considerations: Some smart locators integrate accessibility best practices by prioritizing attributes used by screen readers or assistive technologies. This ensures your tests can identify elements even if the UI is not visually rendered in the same way. For example, a smart locator could prioritize ARIA attributes (used to describe UI elements for assistive technologies) for identification, making your tests more inclusive and reliable.
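Every tool implements smart locators in its own way, so the following is only a toy illustration of the “combine several attributes” idea, not how any particular product works. Elements are modeled as plain dictionaries (a hypothetical stand-in for the DOM), and the hint names and weights in find_best_match are assumptions. Accessible names such as aria_label are weighted highest, and the function refuses to guess when two candidates tie, which is exactly the “multiple Submit buttons” situation described above.

```python
def score(element, hints):
    """Sum the weights of the hints an element satisfies (weights are illustrative)."""
    weights = {"aria_label": 3, "text": 2, "form": 2, "tag": 1}
    return sum(
        weights.get(key, 1)
        for key, value in hints.items()
        if element.get(key) == value
    )


def find_best_match(elements, hints):
    """Return the single highest-scoring element; raise if nothing matches or there is a tie."""
    scored = sorted(((score(e, hints), e) for e in elements),
                    key=lambda pair: pair[0], reverse=True)
    if not scored or scored[0][0] == 0:
        raise LookupError(f"No element matches {hints}")
    if len(scored) > 1 and scored[1][0] == scored[0][0]:
        raise LookupError(f"Ambiguous match for {hints}; add more hints")
    return scored[0][1]


# Two "Submit" buttons with auto-generated IDs that may change between builds.
page = [
    {"id": "btn-7f3a", "tag": "button", "text": "Submit", "form": "newsletter"},
    {"id": "btn-91c2", "tag": "button", "text": "Submit", "form": "login",
     "aria_label": "Submit login"},
]
button = find_best_match(page, {"tag": "button", "text": "Submit", "form": "login"})
print(button["id"])  # btn-91c2
```

Note that the unstable id attribute is never used for matching; the login button is found through its text, tag, and surrounding form.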

Synchronization

In automated testing, synchronization refers to ensuring that your test scripts wait for the web application or system under test (SUT) to reach a specific state before interacting with UI elements. This is crucial to prevent errors caused by timing issues.

Common synchronization techniques include:

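- Implicit waits: These set a global timeout (e.g., 10 seconds) that the driver applies whenever it looks up an element: it keeps polling until the element is found or the timeout expires. This is simple to configure, but it applies to every lookup and only checks that the element exists, not that it is visible or clickable. Fixed sleeps, which pause for a set time regardless of the page state, are even cruder: they waste time when the element loads faster and still fail when it loads slower.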

- Explicit waits: These waits involve checking for specific conditions before proceeding. For example, waiting for an element to be clickable or visible ensures the element is ready for interaction. This is a more targeted approach that avoids unnecessary delays.

- Wait for JavaScript execution to complete: Some frameworks offer functionalities to wait for asynchronous JavaScript operations to finish before continuing. This ensures the test script interacts with the application after the data has been fetched or processed.

- Page Object Model (POM) design pattern: This design pattern encourages creating reusable page objects that encapsulate UI elements and actions. Synchronization logic can be integrated within these page objects, ensuring proper waits before interacting with elements.
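An explicit wait is essentially a polling loop with a deadline. Selenium’s Python bindings, for example, provide it as WebDriverWait(driver, 10).until(EC.element_to_be_clickable((By.ID, "login"))). The framework-agnostic sketch below shows the same idea; wait_until is a hypothetical helper, and the condition callable stands in for a check such as “the login button is clickable”. Unlike a fixed sleep, it returns as soon as the condition holds.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5, message="condition"):
    """Poll `condition` until it returns a truthy value or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # proceed as soon as the app is ready
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Timed out after {timeout}s waiting for {message}")
        time.sleep(poll_interval)


# Hypothetical example: a "page" that becomes ready after roughly 0.3 seconds.
ready_at = time.monotonic() + 0.3
wait_until(lambda: time.monotonic() >= ready_at, timeout=2, poll_interval=0.05,
           message="login button to be clickable")
```

In a page object, a call like this would sit inside the method that clicks the button, so every test using that page object gets the wait for free.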

Test Environment Validation

Test environment validation refers to the process of verifying that the testing environment (hardware, software, configuration) accurately reflects the production environment or the environment where the application will be deployed. This is crucial to prevent errors caused by inconsistencies between the environments and ensure that your tests accurately represent real-world behavior.

You can use these techniques to validate your test environment:

- Configuration management tools: Utilize tools that help manage and compare configurations across different environments. This allows you to identify discrepancies and ensure consistency.

- Automated environment checks: Implement automated scripts or tools that can verify the state of the test environment (e.g., database connectivity, application version) before running tests.

- Manual reviews: Conduct periodic manual reviews of the test environment to verify its configuration and data accuracy compared to the production environment.

- Version control systems: Use version control systems for configuration files and test data to track changes and make rollbacks possible when necessary.

- Monitoring and logging: Implement monitoring tools to track resource usage and performance metrics within the test environment. Additionally, logging environment details during test execution can help identify potential issues during troubleshooting. Read more in this Test Log Tutorial.
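An automated environment check can be as simple as comparing the settings you expect with the ones you observe, and refusing to run the suite on a mismatch. In the sketch below, find_config_drift and assert_environment_ready are hypothetical helpers, and the keys (app_version, feature_flags) are illustrative. In practice, the actual values might come from a health-check endpoint, environment variables, or your configuration management tool.

```python
def find_config_drift(expected, actual):
    """Return human-readable differences between expected and actual settings."""
    problems = []
    for key, want in expected.items():
        if key not in actual:
            problems.append(f"missing setting: {key}")
        elif actual[key] != want:
            problems.append(f"{key}: expected {want!r}, found {actual[key]!r}")
    return problems


def assert_environment_ready(expected, actual):
    """Fail fast, before any test runs, if the environment has drifted."""
    problems = find_config_drift(expected, actual)
    if problems:
        raise RuntimeError("Test environment is not valid:\n  " + "\n  ".join(problems))


expected = {"app_version": "2.4.1", "feature_flags": {"new_checkout": True}}
actual = {"app_version": "2.4.0", "feature_flags": {"new_checkout": True}}
print(find_config_drift(expected, actual))
# ["app_version: expected '2.4.1', found '2.4.0'"]
```

Failing fast here turns a confusing pile of downstream test failures into one clear message about the environment.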

Exception handling

Exception handling is a strategy for gracefully managing unexpected errors or exceptions that might occur during test execution. While it does not directly prevent errors, it helps you deal with them effectively, gather valuable information, and ensure your tests continue execution whenever possible.

Here are some common exception-handling techniques:

- Try-catch blocks (programming language specific): By using try-catch blocks, you can wrap critical sections of your test script within a “try” block. If an error (exception) occurs within this block, a “catch” block is triggered, allowing you to capture the error details. This helps isolate the source of the error and prevents the entire test from crashing.

- Assertions with error messages: Utilize assertions within your tests and provide informative error messages when they fail. This helps pinpoint the location of the issue within the test logic.

- Logging mechanisms: Implement logging functionality to capture error details, stack traces, and relevant test data during exception handling. This information is essential for debugging and analysis.
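In Python, the try-catch idea is written as try/except. A common pattern in test code, sketched below, is to catch an exception only long enough to log useful context (the step name, the test data, and the full stack trace through logger.exception) and then re-raise it, so the failure is still reported instead of silently swallowed. The run_step helper and its keyword arguments are hypothetical.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
logger = logging.getLogger("tests")


def run_step(name, action, **context):
    """Run one test step; on failure, log context plus stack trace, then re-raise."""
    try:
        result = action()
        logger.info("step passed: %s", name)
        return result
    except Exception:
        # logger.exception logs at ERROR level and appends the traceback.
        logger.exception("step failed: %s | context=%s", name, context)
        raise  # keep the test failing; do not hide the error


def open_missing_page():
    # Hypothetical step that fails the way a missing element would.
    raise LookupError("Element not found: #checkout")


try:
    run_step("open checkout", open_missing_page, user="alice", browser="firefox")
except LookupError as err:
    print(f"test failed as expected: {err}")
```

Swallowing the exception would let the test continue, but it also risks a green result for a broken flow. Re-raising is the safer default; reserve recovery for genuinely optional steps, such as dismissing a cookie banner.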

Error reporting

Design your tests to report errors clearly and concisely. The information in the error message is crucial for identifying the root cause of test failures, debugging issues within the application or test scripts, and ultimately preventing similar errors from occurring in the future.

Effective error reporting goes beyond simply stating that a test case failed. It should capture detailed error messages associated with the failure. These messages should provide specific information about the nature of the error, such as:

- Exception types (e.g., “Element not found,” “Assertion error”)

- Stack traces (sequence of function calls leading to the error)

- Line numbers in the test script where the error occurred

- Any additional context relevant to the error (e.g., failed assertion message)

For example, instead of a generic “Test failed” message, the error report might show: “AssertionError: Expected button text to be ‘Submit’ but found ‘Save’.” This detailed information pinpoints the specific assertion that failed and allows for easier debugging.

Error reports should be well-organized and easy to understand. They should clearly indicate which test cases failed, the associated errors, and any relevant screenshots or logs. This allows developers and testers to quickly identify and address the root causes of the errors.
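As a rough sketch of capturing the details listed above, the hypothetical build_failure_report function below turns a caught exception into a small report: the exception type, the message, the file and line number where it was raised, the full stack trace, and any extra context such as a screenshot path. The field names are assumptions; test runners such as pytest already produce much of this for you.

```python
import traceback


def build_failure_report(test_name, exc, **context):
    """Summarize an exception as a dict suitable for logs or a test report."""
    frames = traceback.extract_tb(exc.__traceback__)
    last = frames[-1] if frames else None  # innermost frame: where it was raised
    return {
        "test": test_name,
        "exception_type": type(exc).__name__,
        "message": str(exc),
        "file": last.filename if last else None,
        "line": last.lineno if last else None,
        "stack_trace": "".join(
            traceback.format_exception(type(exc), exc, exc.__traceback__)
        ),
        "context": context,
    }


try:
    actual_text = "Save"  # hypothetical value read from the page
    if actual_text != "Submit":
        raise AssertionError(
            f"Expected button text to be 'Submit' but found '{actual_text}'"
        )
except AssertionError as exc:
    report = build_failure_report("test_submit_button_label", exc, screenshot="step_3.png")
    print(report["exception_type"], "-", report["message"])
    # AssertionError - Expected button text to be 'Submit' but found 'Save'
```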

Common errors in automated tests and their solutions

Here are some common errors that you might encounter when working with automated tests:

Assertion failures

These occur when the actual result of a test does not match the expected result.

You can try to:

- Ensure that the expected results are correct.

- Validate if the system under test (SUT) is in the correct state.

- Use descriptive error messages to clarify what failed and why.

Timeout errors

They occur when a test exceeds the maximum allowed time for execution.

You can try to:

- Increase the timeout duration if it’s too short.

- Optimize the test to run faster.

- Ensure the environment is not causing unnecessary delays.
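Many test runners and frameworks offer their own timeout settings, and those are the first place to look. If you need a hard limit around an arbitrary call, here is a minimal Python sketch using concurrent.futures; run_with_timeout is a hypothetical helper. Note its limitation: Python cannot forcibly stop a running thread, so the slow call keeps running in the background. The timeout only stops the test from waiting on it.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout


def run_with_timeout(func, timeout_s, *args, **kwargs):
    """Run func(*args, **kwargs); raise TimeoutError if it takes longer than timeout_s."""
    executor = ThreadPoolExecutor(max_workers=1)
    future = executor.submit(func, *args, **kwargs)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        raise TimeoutError(f"{func.__name__} exceeded {timeout_s}s") from None
    finally:
        # Don't block waiting for the worker thread; it cannot be forcibly stopped.
        executor.shutdown(wait=False)


print(run_with_timeout(sum, 1.0, [1, 2, 3]))  # 6
```

When a call like this times out, check whether the limit is simply too short or whether the environment is slow before raising it; a generous timeout can hide a real performance problem.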

Element not found errors

These happen when a UI element that a test tries to interact with cannot be found.

You can try to:

- Verify the element’s locator (ID, class, XPath, etc.).

- Ensure the page is fully loaded before interacting.

- Add waits or retries for dynamic elements.
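For elements that appear asynchronously, a bounded retry around the lookup is a common remedy. The sketch below assumes a hypothetical find_element callable that raises LookupError when the element isn’t there yet. Real tools raise their own exception types (Selenium, for example, raises NoSuchElementException), which you would pass in through the exceptions parameter. Keep the attempt count small so genuine failures still surface quickly.

```python
import time


def retry(action, attempts=3, delay=0.5, exceptions=(LookupError,)):
    """Call `action` up to `attempts` times, sleeping `delay` seconds between tries."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except exceptions:
            if attempt == attempts:
                raise  # out of attempts: let the real error surface
            time.sleep(delay)


# Hypothetical dynamic element that only "renders" on the third lookup.
lookups = {"count": 0}


def find_element():
    lookups["count"] += 1
    if lookups["count"] < 3:
        raise LookupError("Element not found: #results")
    return "<div id='results'>"


print(retry(find_element, attempts=5, delay=0.01))  # <div id='results'>
```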

Data-related errors

These occur due to incorrect or inconsistent test data.

You can try to:

- Use fresh data for each test.

- Clean up test data before and after test execution.

- Use mock or fixture data where possible.
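Here is a minimal sketch of per-test fresh data using Python’s built-in sqlite3 and unittest. Each test gets its own in-memory database seeded with fixture rows in setUp, and tearDown discards it, so nothing leaks between tests. The orders table and its rows are hypothetical. Against a shared database, you would instead insert uniquely named records and delete them in tearDown.

```python
import sqlite3
import unittest

FIXTURE_ORDERS = [("A-100", "pending"), ("A-101", "shipped")]


class OrderTests(unittest.TestCase):
    def setUp(self):
        # Known starting state for every test.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, status TEXT)")
        self.db.executemany("INSERT INTO orders VALUES (?, ?)", FIXTURE_ORDERS)

    def tearDown(self):
        # Throw the data away so the next test starts clean.
        self.db.close()

    def test_cancel_pending_order(self):
        self.db.execute("UPDATE orders SET status = 'cancelled' WHERE id = 'A-100'")
        status = self.db.execute("SELECT status FROM orders WHERE id = 'A-100'").fetchone()[0]
        self.assertEqual(status, "cancelled")

    def test_fixture_data_is_untouched(self):
        # Passes regardless of test order because setUp rebuilt the data.
        rows = self.db.execute("SELECT id, status FROM orders ORDER BY id").fetchall()
        self.assertEqual(rows, FIXTURE_ORDERS)
```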

Dependency errors

These occur when tests depend on external systems or on other tests.

You can try to:

- Mock external dependencies.

- Ensure tests are independent and can run in isolation.

- Use setup and teardown methods to manage dependencies.
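The sketch below uses Python’s unittest.mock to replace an external service. The convert_price function and the rates_client.get_rate interface are hypothetical. The point is that the code under test receives its dependency as a parameter, so a test can pass in a Mock with a canned answer instead of calling a real currency service that may be slow or unavailable. The test functions follow the pytest naming convention, but they are plain functions and can be called directly.

```python
from unittest.mock import Mock


def convert_price(amount, currency, rates_client):
    """Hypothetical code under test: converts a USD amount via an external rates service."""
    rate = rates_client.get_rate("USD", currency)
    return round(amount * rate, 2)


def test_convert_price_uses_rate_from_service():
    fake_client = Mock()
    fake_client.get_rate.return_value = 0.5  # canned, deterministic response
    assert convert_price(10.0, "EUR", fake_client) == 5.0
    # Also verify how the code talked to its dependency.
    fake_client.get_rate.assert_called_once_with("USD", "EUR")


def test_convert_price_propagates_service_outage():
    fake_client = Mock()
    fake_client.get_rate.side_effect = ConnectionError("rates service unavailable")
    try:
        convert_price(10.0, "EUR", fake_client)
    except ConnectionError:
        return
    raise AssertionError("expected ConnectionError")
```

The second test shows another benefit of mocking: you can simulate an outage on demand, which is hard to do reliably with the real service.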

Environment-specific errors

Errors that occur due to differences in test environments (e.g., development vs. production).

You can try to:

- Ensure the test environment closely matches the production environment.

- Use configuration files to manage environment-specific settings.

- Implement environment checks in tests.
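One way to manage environment-specific settings, sketched in Python, is shared defaults plus a small override per environment, selected by an environment variable. The TEST_ENV variable name, the environment names, and the URLs are hypothetical, and the JSON is inlined to keep the example self-contained. Normally each environment would have its own configuration file.

```python
import json
import os

# Normally these would be separate files, e.g. config/staging.json.
CONFIGS = json.loads("""
{
  "defaults": {"timeout_s": 10, "headless": true},
  "staging":  {"base_url": "https://staging.example.com"},
  "local":    {"base_url": "http://localhost:8000", "headless": false}
}
""")


def load_settings(env_name=None):
    """Merge the shared defaults with the overrides for one environment."""
    env_name = env_name or os.environ.get("TEST_ENV", "local")
    if env_name not in CONFIGS or env_name == "defaults":
        raise ValueError(f"Unknown test environment: {env_name!r}")
    return {**CONFIGS["defaults"], **CONFIGS[env_name]}
```

Rejecting unknown environment names up front means a typo in TEST_ENV fails immediately instead of silently running the suite against the wrong system.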

Syntax and code errors

Errors due to mistakes in the test code itself (e.g., syntax errors, typos).

You can try to:

- Use linters and code analysis tools to catch syntax errors.

- Review and test the test code itself.

- Implement code reviews and pair programming.
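Dedicated linters go much further, but even Python’s built-in compile() can serve as a cheap pre-flight gate that reports syntax errors with file names and line numbers before a long suite starts. The check_syntax helper and the sample sources below are hypothetical; compile() only parses the code, it does not run it.

```python
def check_syntax(sources):
    """Compile each source string without running it; return a list of syntax problems."""
    problems = []
    for filename, code in sources.items():
        try:
            compile(code, filename, "exec")
        except SyntaxError as err:
            problems.append(f"{filename}:{err.lineno}: {err.msg}")
    return problems


sources = {
    "test_login.py": "def test_login():\n    assert True\n",
    "test_cart.py": "def test_cart()\n    assert True\n",  # missing colon
}
for problem in check_syntax(sources):
    print(problem)
```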

Effective error handling with testRigor

Most legacy test automation tools leave you exposed to these errors because they rely on coding test scripts and using traditional locators to create automated tests. What you need is a modern tool that removes this dependency on the implementation details of the SUT while also being easy to use. With a test automation tool like testRigor, you can achieve this and a lot more with its powerful features.

testRigor uses generative AI so that you can rely on smart locators and write your tests in the simplest way possible: in plain English. This eliminates many of the problems mentioned above, such as syntax errors, locator errors, and logical errors, and makes creating and collaborating on tests easier. Read: How to add waits using testRigor in plain English.

There are built-in provisions to carry out data-driven testing with testRigor, which is a great way to ensure that your test scripts are light and concise. The tool offers a clean and easy-to-understand UI to write test scripts and configure the test environment. Even if you do encounter errors, the report that testRigor offers is very easy to interpret. Every test run is tagged with a test execution video, screenshots at every step, and simple English explanations for what went wrong.

With testRigor taking care of so much for you, you can focus on covering tests across various platforms and browsers with this single tool.

Conclusion

Errors are valuable because they show us areas for improvement. But that holds for errors in the application under test; errors in the test scripts, which are meant to find those problems, only get in the way. With the above strategies, you can focus on what really matters, that is, improving the quality of your application, rather than wasting time fixing test scripts. Use well-rounded tools that aid your QA endeavors rather than hinder them.

FAQs

What is the importance of error handling in automated tests?

Error handling in automated tests is important for identifying issues promptly, reducing false positives/negatives, maintaining the reliability of the test suite, and ensuring that the system under test performs as expected. Effective error handling helps in diagnosing the root cause of failures quickly, thereby saving time and resources.

How can I categorize different types of automated test errors?

Automated test errors can be categorized into:

- Test failures: When the system under test does not meet the expected outcome.

- Test errors: When the test code itself has issues, such as bugs or environmental problems.

- Flaky tests: Tests that pass and fail intermittently without any code changes.

What are some common strategies for handling flaky tests?

Strategies for handling flaky tests include:

- Implementing retry logic to rerun the test if it fails initially.

- Using waits or delays for dynamic elements in UI tests.

- Ensuring tests are isolated and independent.

- Regularly reviewing and refactoring flaky tests to improve stability.
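One way to tell a flaky test from a genuinely broken one is to rerun it several times without changing anything and look at the mix of results. The sketch below does that for any zero-argument test function; classify_test and its labels are hypothetical. The deliberately flaky example uses a seeded random generator so its behavior is repeatable. Reruns like these are best used to triage tests, not to silently turn red builds green.

```python
import random


def classify_test(test_fn, runs=10):
    """Run test_fn repeatedly; report 'stable-pass', 'stable-fail', or 'flaky'."""
    outcomes = []
    for _ in range(runs):
        try:
            test_fn()
            outcomes.append(True)
        except AssertionError:  # other exceptions propagate as real errors
            outcomes.append(False)
    if all(outcomes):
        return "stable-pass"
    if not any(outcomes):
        return "stable-fail"
    return "flaky"


rng = random.Random(42)


def test_sometimes_times_out():
    # Hypothetical timing-dependent check that fails about 30% of the time.
    if rng.random() <= 0.3:
        raise AssertionError("response arrived too late")


print(classify_test(test_sometimes_times_out, runs=20))  # flaky
```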

What is the role of timeout settings in automated tests?

Timeout settings prevent tests from hanging indefinitely by specifying a maximum duration for test execution. If a test exceeds this duration, it fails with a timeout error. Tuning timeouts is a balancing act: they should allow enough time for legitimate operations while still catching performance issues or hangs quickly. Read more:

- How to Resolve TimeoutException in Selenium

- Cypress Timeout Error: Understanding and Overcoming Cypress Delays

- How to Handle TimeoutException in Playwright?

How often should automated tests be reviewed and maintained?

Automated tests should be reviewed and maintained regularly, ideally:

- After each significant code change, to ensure the tests are still relevant and accurate.

- Periodically (e.g., quarterly) to remove outdated or redundant tests and update existing ones.

- Whenever new features are added, to ensure they are covered by tests and integrated seamlessly.
