AI Agents in Software Testing


We are witnessing a revolution driven by Artificial Intelligence, which now touches almost every aspect of our lives. AI agents in particular are drawing wide interest because of what they can do and the significant changes they promise.

So, what exactly is an AI agent, and what does it do? Let us start with a simple definition: in AI, an intelligent agent is like a smart assistant that perceives its surroundings and makes decisions autonomously to reach its objectives. Once it receives a goal, it can plan and work toward that goal independently.

These agents can be as simple as a thermostat controlling room temperature or as complex as humanoid robots or Mars rovers. In this article, we will discuss the features, types, and applications of AI agents, with a focus on their role in software testing.


What is an AI Agent?

AI agents aim to fulfill specific goals, expressed as an “objective function”. Once an agent has its goal, it creates a task list and works through it by learning from data, recognizing patterns, and making decisions that move it toward that goal.

For instance, reinforcement learning agents use a “reward function” to guide them toward desired actions. These intelligent agents are vital subjects in AI, economics, and cognitive science. They range from individual programs to complex systems operating without any human intervention.
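As a minimal sketch of how a reward function can steer an agent, the toy example below keeps a running average reward per action and always picks the action with the best estimate. The class, the action names, and the hard-coded rewards are all assumptions for illustration; a real reinforcement learning agent would receive rewards from its environment and balance exploration more carefully.

```python
from collections import defaultdict


class GreedyAgent:
    """Toy agent that prefers the action with the best average reward so far."""

    def __init__(self, actions):
        self.actions = list(actions)
        self.totals = defaultdict(float)  # summed reward per action
        self.counts = defaultdict(int)    # times each action was tried

    def estimate(self, action):
        # Untried actions get +inf so each one is tried at least once.
        if self.counts[action] == 0:
            return float("inf")
        return self.totals[action] / self.counts[action]

    def choose(self):
        # max() returns the first maximal action, so ties break by list order.
        return max(self.actions, key=self.estimate)

    def learn(self, action, reward):
        self.totals[action] += reward
        self.counts[action] += 1


def reward_function(action):
    # Assumed, fixed rewards standing in for feedback from an environment.
    rewards = {
        "retry_flaky_test": 0.2,
        "rerun_full_suite": 0.5,
        "run_impacted_tests": 0.9,
    }
    return rewards[action]


agent = GreedyAgent(["retry_flaky_test", "rerun_full_suite", "run_impacted_tests"])
for _ in range(10):
    action = agent.choose()
    agent.learn(action, reward_function(action))

best_action = agent.choose()
```

After trying each action once, the agent settles on the action with the highest reward, which is the behavior the reward function is meant to encourage.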

Recent developments in generative AI allow agents to understand human language. As a result, AI agents act as the connectors that weave AI into our real world and let it carry out required actions autonomously.

Read: AI Assistants vs AI Agents: How to Test?

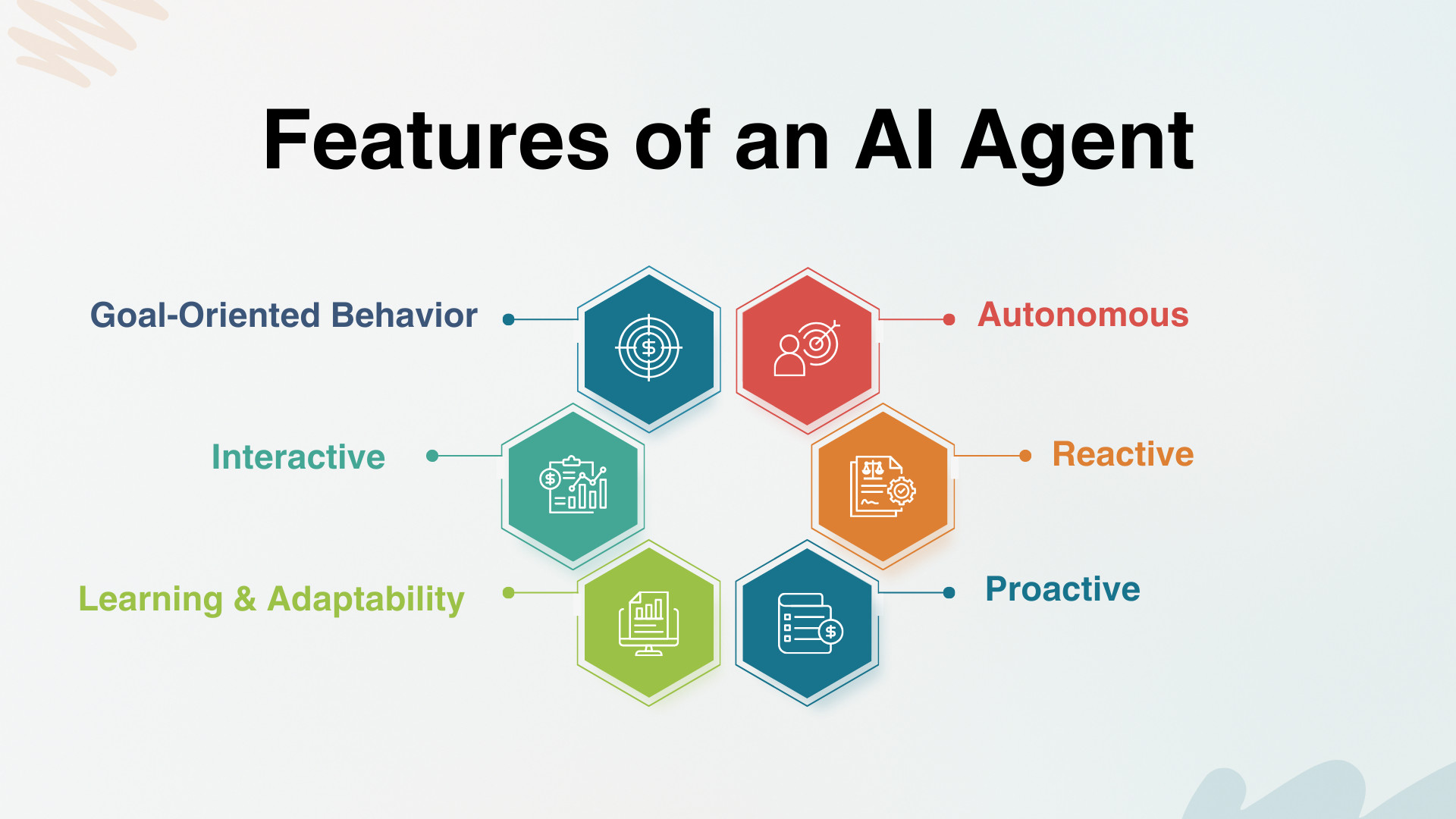

Features of an AI Agent

Below are the prominent features of an AI agent:

- Autonomous: These AI agents can work independently, without any human intervention, using their programs and data. Example: Thermostats like Nest autonomously adjust the temperature, turn off when the house is empty, and work on a schedule.

- Reactive: They respond to their environment as they perceive it and change their response as the environment changes. Example: A chatbot on a banking website responds to the queries it receives. It provides relevant information based on its input and, if required, forwards the query to a human assistant.

- Proactive: They go a step beyond reactive AI agents and take the initiative, planning and executing actions to achieve their objectives. Example: An AI agent working for inventory management foresees, based on the current order size, that the stock will run low in the next two days. So, it automatically places new orders with the suppliers to replenish the stock. Read: Active Testing vs. Passive Testing.

- Interactive: They are good at communicating with fellow AI agents and humans. They interact to find possible solutions to a problem or to complete their tasks. Example: Sophia is a humanoid robot developed by Hanson Robotics that can converse with humans, display facial expressions, and change its responses based on the interaction.

- Learning & Adaptability: AI agents can improve their performance over time by learning from past experiences, feedback, or new data. This allows them to adapt to changing environments and evolving requirements. Example: A recommendation AI on an e-commerce platform refines product suggestions based on user browsing history, purchases, and feedback, becoming more accurate with repeated interactions.

- Goal-Oriented Behavior: AI agents are designed to work toward specific goals or objectives and can evaluate multiple action paths to determine the best course of action. Example: A route-planning AI in logistics evaluates traffic, weather, fuel cost, and delivery deadlines to choose the most efficient delivery route.

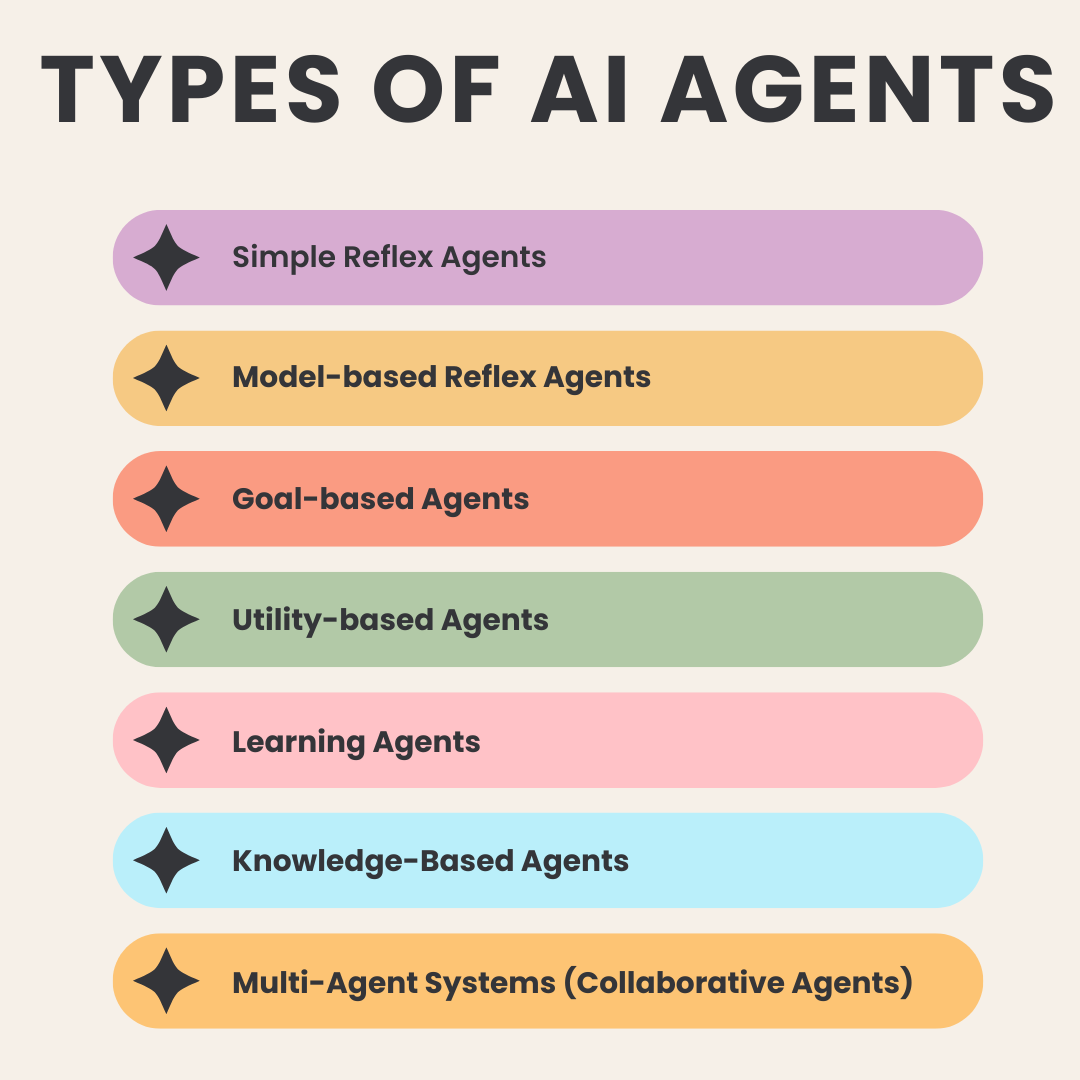

Types of AI Agents

AI agents can be divided into different categories:

- Simple Reflex Agents: They act on basic condition-action rules using only their current perception of the environment. They do not consider past perceptions, so they cannot adjust to changes that are not visible in the current input. Example: A keyword-based spam filter scans each incoming email for spam keywords and immediately moves matches to the ‘Spam’ folder without considering any other context or history.

- Model-based Reflex Agents: They maintain an internal model of how the world works, built from past perceptions. They make informed decisions by combining this model with the current state of the environment. Example: An intelligent air conditioner combines the current room temperature (environment) with historical perceptions, such as the time of day and past temperature choices, to decide how to cool the room.

- Goal-based Agents: They act on the current state and the goal they have to achieve, choosing the path among several options that best leads to that goal. Example: Consider a Mars rover exploring the surface of Mars. It decides where to go next based on its objective, e.g., collecting soil samples from areas that may once have contained water.

- Utility-based Agents: They go a level beyond goal-based agents by scoring all potential outcomes with a ‘Utility Function’ and choosing the actions that maximize utility. Example: A personal finance app evaluates how to allocate your monthly savings across different investment options (stocks, bonds, savings accounts) and suggests the allocation that maximizes your expected financial return for your risk preference.

- Learning Agents: These agents use machine learning to learn from past experiences and improve over time. They evaluate their own performance and find new ways to improve it. Example: A well-known example is DeepMind’s AlphaGo, which learned to play the board game Go at a world-champion level. It was trained on human expert games and on games it played against itself, and it improved continuously.

- Knowledge-Based Agents: These agents rely on a structured knowledge base (facts, rules, and relationships) and use inference to make decisions. Example: A medical diagnosis AI uses a medical knowledge base of symptoms, diseases, and treatments to suggest possible diagnoses.

- Multi-Agent Systems (Collaborative Agents): These systems consist of multiple AI agents working together, either cooperatively or competitively, to solve complex problems. Example: Autonomous drones coordinating with each other during disaster relief to map affected areas and locate survivors.
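The difference between the first few agent types can be sketched in a few lines of Python. Everything here is hypothetical and simplified: the keyword list, the thermostat thresholds, and the utility formula are assumptions chosen only to show how each type decides.

```python
SPAM_KEYWORDS = {"lottery", "free money", "act now"}  # assumed keyword list


def simple_reflex_spam_filter(email_text):
    """Simple reflex agent: a condition-action rule on the current percept only."""
    text = email_text.lower()
    return "spam" if any(keyword in text for keyword in SPAM_KEYWORDS) else "inbox"


class ModelBasedThermostat:
    """Model-based reflex agent: keeps internal state built from past percepts."""

    def __init__(self, target=22.0):
        self.target = target
        self.history = []  # internal model: temperature readings seen so far

    def act(self, temperature):
        self.history.append(temperature)
        recent = self.history[-3:]
        average = sum(recent) / len(recent)
        # Decide on the smoothed reading, not just the latest one.
        if average > self.target + 0.5:
            return "cool"
        if average < self.target - 0.5:
            return "heat"
        return "idle"


def utility_based_choice(options, risk_aversion):
    """Utility-based agent: score every option and pick the highest utility."""

    def utility(option):
        return option["expected_return"] - risk_aversion * option["risk"]

    return max(options, key=utility)["name"]


investments = [
    {"name": "stocks", "expected_return": 0.08, "risk": 0.2},
    {"name": "bonds", "expected_return": 0.04, "risk": 0.05},
    {"name": "savings", "expected_return": 0.02, "risk": 0.0},
]
```

With these numbers, a low `risk_aversion` favors stocks while a high one favors the savings account, which is the trade-off a utility function is meant to capture.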

Read: Machine Learning Models Testing Strategies.

Agentic Testing vs. Traditional Automation

As software systems become more complex and release cycles accelerate, traditional test automation is starting to show its limits. Teams are now exploring agentic testing, where AI agents actively reason, decide, and adapt during testing instead of merely executing pre-scripted steps.

| Aspect | Traditional Automation | AI Agents (Agentic Testing) |
|---|---|---|
| Test Creation | Manual scripting using frameworks and code | Autonomous or prompt-driven test creation |
| Maintenance | High effort due to UI and logic changes | Self-healing tests adapt automatically |
| Decision-making | Fully human-driven logic and flows | Agent-driven reasoning and decisions |
| Risk Prioritization | Static, rule-based prioritization | Dynamic and predictive risk assessment |
| Coverage Strategy | Predefined and limited by scripts | Continuously evolving and adaptive |
| Skill Barrier | High – requires strong coding and framework expertise | Low – shared ownership across QA, Dev, and Product |

Applications of AI Agents

Here are the common applications of AI agents in the real world:

- Personal Assistants: We all use assistants such as Siri, Google Assistant, and Alexa to set reminders, make calls, manage smart homes, get answers, and shop.

- Autonomous Vehicles: These agents perceive the environment and make decisions to reach the desired destination. Waymo’s self-driving cars use a combination of sensors, cameras, and artificial intelligence to navigate roads, recognize traffic signals, and make driving decisions.

- Healthcare Systems: AI agents are being used for diagnosis support, personalized treatment recommendations, and patient monitoring. One example is IBM Watson, which can analyze the meaning and context of structured and unstructured data in clinical notes. It then reports and assists doctors with diagnoses and treatment options.

- Finance and Banking: AI agents are helpful in fraud detection, customer support, and algorithmic trading. For example, AI agents analyze market conditions across multiple securities during trading. Based on complex mathematical models, they execute trades at the best times to maximize profit or minimize loss.

- Recommendation Systems: They are widely used by platforms like Netflix and Amazon to recommend movies or products based on user preferences and behavior.

- Software Testing and Quality Assurance: AI agents automate test creation, execution, and failure analysis. Modern testing agents generate tests in plain English, adapt to UI changes, and identify flaky tests with minimal maintenance.

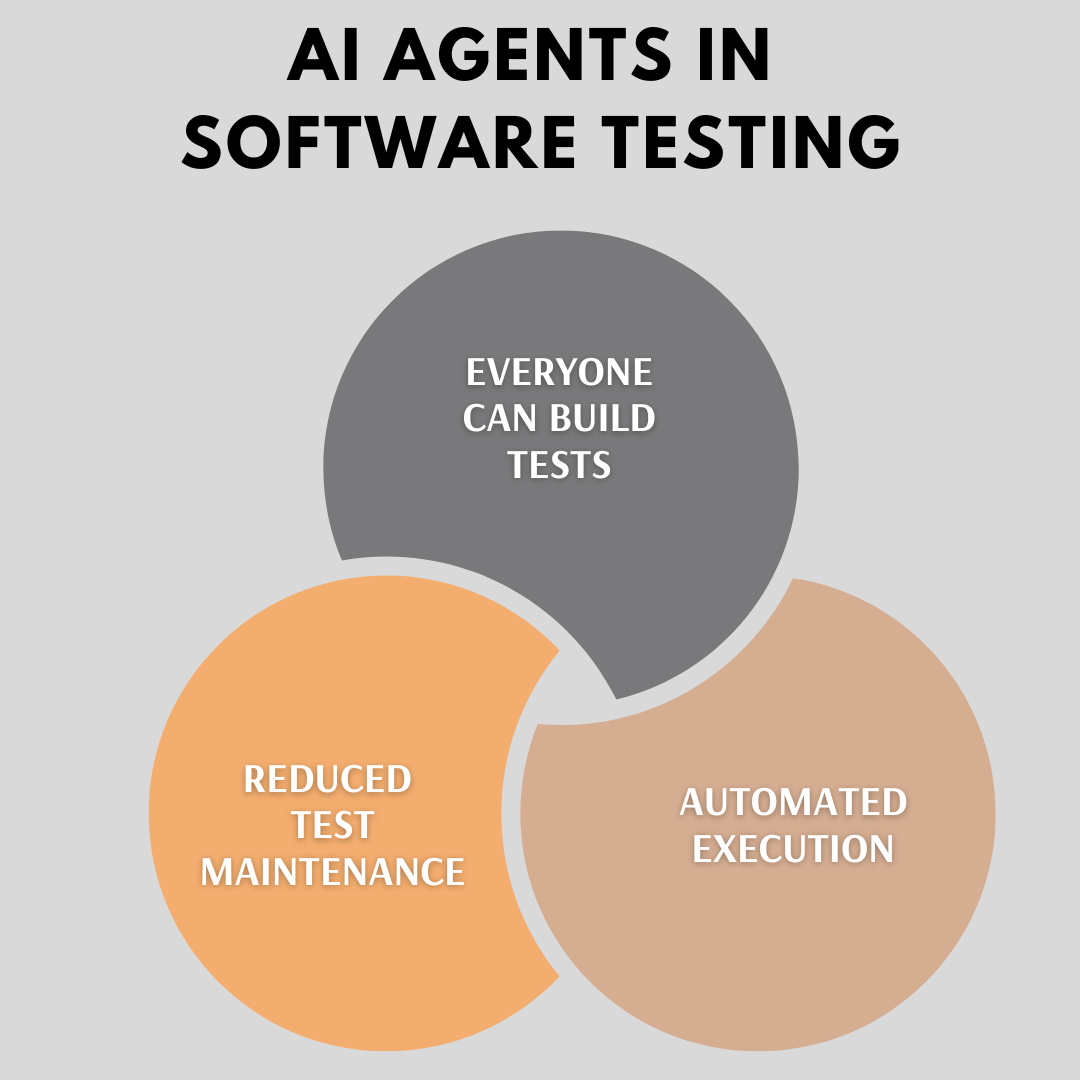

AI Agents in Software Testing

Here, we discuss the top applications of AI agents in software testing and how they can improve the overall testing process. Having AI agents run the tests brings three main benefits:

- Everyone Can Build Tests: They enable anyone in the team to develop and maintain tests, irrespective of their technical expertise. If you do not know coding, you can still create robust and stable test scripts in plain English. Read: Creating Your First Codeless Test Cases.

- Automated Execution: These AI agents can automatically execute manual test cases without human intervention. Read: How to execute test cases in parallel in testRigor?

- Reduced Test Maintenance: Since the description is high-level (natural language), these AI agents avoid any reliance on implementation details. This feature significantly reduces test maintenance. Read: Decrease Test Maintenance Time by 99.5% with testRigor.

Let us learn about AI agents’ applications in software testing.

Automated Test Case Generation

Intelligent AI agents can automatically generate test cases based on the requirements and specifications of the software. They rely on the following capability to do so:

Natural Language Understanding (NLU): Regarding software QA, an AI Agent is a system that understands natural language as a description of what needs to be done and can execute that description as a test. The keyword here is “execute”. Read: Natural Language Processing for Software Testing

Suppose that, for an e-commerce website, you give the AI the prompt: “find and add a Kindle to the shopping cart”. It should execute a sequence of steps that finds a Kindle and adds it to the cart. Those actions might include entering “Kindle” into the search box, clicking on a specific product, and clicking the “Add to cart” button.

find and select a kindle

add it to the shopping cart

proceed to the cart

check that page contains “Kindle”

It would be an executable test that validates the ability of the website to add a product to a shopping cart. Here is a video to learn more about how to use testRigor’s generative AI for software testing.
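To make the idea of "executing a description" more concrete, here is a minimal sketch of how plain-English steps could be mapped to structured actions. This is not how testRigor works internally; the step patterns, the function names, and the action dictionaries are assumptions for illustration, and a real agent would use language understanding rather than fixed regular expressions.

```python
import re

# Hypothetical mapping from plain-English step patterns to structured actions.
STEP_PATTERNS = [
    (re.compile(r'^enter "(?P<value>.+)" into "(?P<target>.+)"$'), "type"),
    (re.compile(r'^click "(?P<target>.+)"$'), "click"),
    (re.compile(r'^check that page contains "(?P<value>.+)"$'), "assert_text"),
]


def parse_step(step):
    """Turn one plain-English step into an action dictionary."""
    for pattern, action in STEP_PATTERNS:
        match = pattern.match(step.strip())
        if match:
            return {"action": action, **match.groupdict()}
    raise ValueError(f"Unrecognized step: {step!r}")


def parse_test(script):
    """Parse a multi-line script, skipping blank lines."""
    return [parse_step(line) for line in script.splitlines() if line.strip()]


script = '''
enter "Kindle" into "Search"
click "Add to cart"
check that page contains "Kindle"
'''
actions = parse_test(script)
```

Each resulting action is explicit and human-readable, so an unexpected interpretation of a prompt can be spotted and corrected before the test runs.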

testRigor is an AI agent for software testing that works on two levels:

- Level 1: On the top level, it uses Generative AI to allow you to use any prompts in your own language.

- Level 2: It then gets to the lower level, writing the actions in plain English using a more specific format described in this documentation. Because all prompts at the time of execution are represented in the form of specific actions in English, it is easy to correct the action if AI hallucinates and does not do what you would expect it to do for a particular prompt.

Automation in Test Execution

AI agents in software testing run the test cases automatically without needing any manual intervention. The test suites are triggered automatically when code changes are pushed into the repository. Also, integrations with defect management systems allow defects to be raised and reports to be shared with stakeholders automatically. These agents can run test cases around the clock and in parallel, achieving broad test coverage without manual assistance.
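The flow described above (a push triggers the suite, tests run in parallel, failures become defect records) could look roughly like the sketch below. The `on_push` hook, the report layout, and the two sample checks are hypothetical; a real setup would be wired into a CI system and a defect tracker.

```python
from concurrent.futures import ThreadPoolExecutor


def run_test(test):
    """Run one test callable and capture the outcome instead of raising."""
    name, func = test
    try:
        func()
        return name, "passed", ""
    except AssertionError as exc:
        return name, "failed", str(exc)


def on_push(tests, max_workers=4):
    """Hypothetical push hook: run all tests in parallel and build a report."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(run_test, tests))  # map() preserves input order
    failures = [result for result in results if result[1] == "failed"]
    return {
        "total": len(results),
        "failed": len(failures),
        # Each failure becomes a defect record that could be sent to a tracker.
        "defects": [{"test": name, "detail": detail} for name, _, detail in failures],
    }


def check_login():
    assert 1 + 1 == 2


def check_checkout():
    assert "cart".upper() == "Cart", "cart label has wrong casing"


report = on_push([("login", check_login), ("checkout", check_checkout)])
```

Here the `checkout` check fails on purpose, so the report contains one defect entry with the assertion message attached.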

Adaptive Test Scripts

AI agents like testRigor exhibit self-healing capabilities, where test scripts automatically adapt to application UI or API changes without human intervention. This reduces the maintenance overhead of test scripts to a great extent and improves the automated tests over time.

For instance, if a login button is changed from a <button> tag to an <a> tag, testRigor, as an AI agent, looks at how the button is rendered to the user rather than at its underlying tag, and understands the button’s role within the application context.
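The button example can be illustrated with a small sketch that contrasts a lookup tied to the tag name with one based on what the user sees. The page representation and both helper functions are assumptions made for this example, not a description of any tool's internals.

```python
# A tiny stand-in for a rendered page: each element has a tag, visible text, and role.
page_v1 = [
    {"tag": "input", "text": "", "role": "textbox", "label": "Email"},
    {"tag": "button", "text": "Log in", "role": "button", "label": ""},
]
# After a redesign, the login control became a link styled as a button.
page_v2 = [
    {"tag": "input", "text": "", "role": "textbox", "label": "Email"},
    {"tag": "a", "text": "Log in", "role": "button", "label": ""},
]


def find_by_tag(page, tag, text):
    """Brittle lookup tied to an implementation detail (the tag name)."""
    return next((e for e in page if e["tag"] == tag and e["text"] == text), None)


def find_by_visible_text(page, text):
    """Lookup based on what the user sees, ignoring the underlying tag."""
    wanted = text.strip().lower()
    return next((e for e in page if e["text"].strip().lower() == wanted), None)
```

The tag-based lookup finds the control on `page_v1` but returns `None` on `page_v2`, while the text-based lookup keeps working after the redesign.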

Shift-Everywhere Testing

AI is vital in shift-everywhere testing, where testing activities happen continuously across development, testing, deployment, and production. AI agents assist developers and testers by providing real-time feedback on code changes, suggesting the right tests to run, and identifying potential defects at every stage.

DevTestOps, test scalability, and TestOps, powered by AI agents, enable scalable and always-on quality practices. This approach helps teams deliver faster while maintaining high and consistent quality. Quality becomes a shared responsibility, guided by intelligent, data-driven decisions throughout the lifecycle. Read: Shift Everywhere in Software Testing: The Future with AI and DevOps.

Test Optimization

You can use AI agents to analyze historical test data and identify areas of the software that are more prone to defects. These areas might require more intensive testing. This can help optimize the test suite, ensuring that testing efforts focus on the most critical and high-risk areas. During test optimization, redundant or irrelevant tests are also removed from the test suite to save testing time.
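A simplified version of this optimization can be sketched as two steps: drop tests that cover exactly the same areas as an earlier test, then order the rest by historical failure rate. The data layout and both heuristics are assumptions for illustration; real agents would use richer signals such as code changes and coverage data.

```python
def prioritize(tests, history):
    """Order tests by historical failure rate, highest risk first."""

    def failure_rate(name):
        runs = history.get(name, [])
        # Tests without history get a rate of 1.0 so that new tests run early.
        return sum(1 for run in runs if run == "fail") / len(runs) if runs else 1.0

    return sorted(tests, key=lambda t: (-failure_rate(t["name"]), t["name"]))


def drop_redundant(tests):
    """Keep only the first test for each identical set of covered areas."""
    seen, kept = set(), []
    for test in tests:
        key = frozenset(test["covers"])
        if key not in seen:
            seen.add(key)
            kept.append(test)
    return kept


tests = [
    {"name": "login", "covers": ["auth"]},
    {"name": "login_copy", "covers": ["auth"]},
    {"name": "checkout", "covers": ["cart", "payment"]},
    {"name": "search", "covers": ["catalog"]},
]
history = {
    "login": ["pass", "pass", "pass", "fail"],
    "checkout": ["fail", "fail", "pass", "pass"],
    "search": ["pass", "pass", "pass", "pass"],
}

optimized = prioritize(drop_redundant(tests), history)
order = [t["name"] for t in optimized]
```

With this history, `checkout` runs first because it failed most often, and `login_copy` is removed as a duplicate of `login`.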

Visual Testing

AI techniques, especially those using machine learning and computer vision, can automate the process of identifying visual discrepancies in the UI of an application across different devices and screen sizes. You can automatically detect visual defects in web and mobile applications by comparing screenshots against a baseline. This helps in identifying visual regressions that might not be caught by traditional functional testing.

compare screen

compare screen to stored value "Saved Screenshot"

Read: How to do visual testing using testRigor?
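At its simplest, comparing a screenshot against a baseline means counting how many pixels changed. The sketch below does this on small grayscale grids represented as nested lists; the function names, the tolerance, and the 1% threshold are assumptions, and production tools typically rely on computer vision techniques that are far more robust to rendering noise.

```python
def diff_ratio(baseline, current, tolerance=10):
    """Fraction of pixels whose grayscale value differs by more than tolerance."""
    if len(baseline) != len(current) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, current)
    ):
        raise ValueError("screenshots must have the same dimensions")
    total = sum(len(row) for row in baseline)
    changed = sum(
        1
        for row_a, row_b in zip(baseline, current)
        for a, b in zip(row_a, row_b)
        if abs(a - b) > tolerance
    )
    return changed / total


def screens_match(baseline, current, max_diff=0.01):
    """Treat screens as matching when at most max_diff of the pixels changed."""
    return diff_ratio(baseline, current) <= max_diff


baseline = [[255] * 10 for _ in range(10)]  # 10x10 white screenshot
shifted = [row[:] for row in baseline]
for x in range(5):
    shifted[0][x] = 0  # five pixels turned black
```

In this example, 5 of 100 pixels differ, so the changed screenshot exceeds the 1% threshold and would be flagged as a visual regression.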

Self-learning and Predictive Analysis

Over time, AI agents can learn from the outcomes of past testing efforts to improve their accuracy and efficiency, reducing the manual effort required for testing. AI can analyze trends from past testing cycles to predict future test outcomes. This helps teams to anticipate and mitigate risks before they become critical.

Performance and Security Analysis

AI agents can monitor application performance under test to identify bottlenecks and optimize for better performance. By analyzing code and identifying patterns that may lead to vulnerabilities, AI agents can enhance the effectiveness of security testing.

AI Agents as First-Class QA Team Members

In modern QA organizations, AI agents should be viewed as roles within the quality system, not just tools that execute automation. Each agent operates with a clear responsibility, collaborates with humans and other agents, and contributes to quality governance rather than simple test execution.

| AI Agent Role | Primary Responsibility |
|---|---|
| Test Design Agent | Converts requirements, pull requests, Figma designs, and tickets into meaningful test scenarios |
| Risk Analysis Agent | Analyzes code commits, change impact, and defect history to prioritize what must be tested first |
| Execution Agent | Decides which environments to use, how much parallelism is needed, and when tests should run |
| Failure Triage Agent | Clusters failures, identifies likely root causes, and assigns ownership to the right teams |
| Coverage Gap Agent | Detects untested user flows and scenarios using production usage and behavior data |
| Release Gatekeeper Agent | Makes go or no-go decisions based on risk signals and confidence, not raw pass percentages |

By defining AI agents as first-class QA team members, testing evolves from task execution into an intelligent, continuously governed quality process where decisions are driven by risk, context, and real system behavior.
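The Release Gatekeeper role from the table can be sketched as a decision that weighs each failure by its risk instead of looking at the raw pass percentage. The `risk_weight` field, the threshold, and the sample data are hypothetical values chosen for illustration.

```python
def release_decision(results, max_risk=1.0):
    """Go/no-go based on risk-weighted failures rather than raw pass percentage."""
    residual_risk = sum(r["risk_weight"] for r in results if not r["passed"])
    pass_rate = sum(1 for r in results if r["passed"]) / len(results)
    return {
        "decision": "go" if residual_risk <= max_risk else "no-go",
        "residual_risk": residual_risk,
        "pass_rate": pass_rate,
    }


# 90% of tests pass, but the single failure is in a critical payment flow.
payment_broken = [{"name": f"t{i}", "passed": True, "risk_weight": 1.0} for i in range(9)]
payment_broken.append({"name": "payment", "passed": False, "risk_weight": 5.0})

# Only 70% of tests pass, but all failures are low-risk cosmetic issues.
cosmetic_issues = [{"name": f"t{i}", "passed": True, "risk_weight": 1.0} for i in range(7)]
cosmetic_issues += [{"name": f"css{i}", "passed": False, "risk_weight": 0.25} for i in range(3)]
```

Under these assumptions, the first build is blocked despite its higher pass rate, while the second is allowed through, which shows why pass percentage alone can be misleading.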

Our Future With AI Agents

Here is a glimpse of our software testing future with AI Agents:

- Collaborative AI Agents: Future developments may introduce collaborative AI agents that work together or with human testers to share insights, learn from each other’s findings, and collectively improve the testing process. Multiple AI agents, each specializing in a different aspect of testing (e.g., UI, performance, security), could work together on a complex application. For example, a performance issue detected by one agent could prompt the security and UI testing agents to adjust their approach. This collaboration leads to a more comprehensive testing approach.

- Assisting Exploratory Testing: AI can assist in exploratory testing by suggesting scenarios to explore based on the application’s complexity, past issues, and areas not covered by existing tests. This helps testers to focus their exploratory efforts more effectively and uncover hidden issues. For example, if a new payment gateway integration is added to an application, the AI could suggest exploratory tests around payment processing, error handling, and user notifications.

- Personalized Testing: In the future, AI agents can create specific testing strategies based on the application’s usage patterns, user feedback, and performance metrics. This means tests will be more user-centric and focused on user experience, helping ensure high-quality releases. For example, based on user interaction data, an AI agent identifies that users face issues with a mobile app’s photo upload feature during low network availability. The AI then prioritizes testing this feature under various network simulations. This helps future releases address this user pain point quickly.

- Autonomous Defect Root Cause Analysis: Future AI agents will not only detect test failures but also identify the root cause by correlating logs, code changes, environment data, and historical defects. This will significantly reduce the time testers and developers spend investigating failures. For example, when a regression test fails after a deployment, the AI agent traces the failure back to a recent backend API change rather than blaming the UI, allowing teams to fix the issue faster.

- Self-Evolving Test Suites: AI agents will continuously analyze application changes, production incidents, and user behavior to automatically update and evolve test suites. Instead of manually maintaining tests, AI agents will detect when tests become outdated and modify them to reflect new workflows, UI changes, or business logic. For instance, if a checkout flow changes due to a new discount feature, the AI agent will recognize the altered user journey and update existing tests or generate new ones without human intervention.

- Ethical and Responsible AI Testing: As AI-powered features become common, AI agents will test other AI systems for bias, fairness, explainability, and compliance. For example, an AI testing agent evaluates a recommendation algorithm to ensure it does not unfairly favor or disadvantage specific user groups.

Multi-Agent Architectures in QA Platforms

Modern QA platforms are increasingly built on multi-agent architectures, where responsibility is distributed across multiple specialized AI agents instead of relying on a single, monolithic intelligence. In this model, one agent does not do everything. Agents are designed with clear, focused roles such as risk analysis, test generation, execution orchestration, or defect triage, and they coordinate with each other using shared context and events. This specialization makes the system more scalable, explainable, and resilient.

A key advantage of this approach is the creation of event-driven quality pipelines that react automatically to changes across the software lifecycle. When code is pushed, a risk analysis agent is triggered to assess change impact and historical failure patterns. If risk is detected, an execution agent dynamically scales and prioritizes the most relevant tests. When failures occur, a triage agent classifies issues, separates real defects from noise, and routes insights to the appropriate teams.

This coordinated, agent-driven flow closely mirrors how modern AI-native QA platforms are being designed, transforming quality engineering into an intelligent, self-adapting system.
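The event-driven flow described above can be sketched with a minimal publish/subscribe bus and three small agents. The event names, the risk rule, the simulated failure, and the team name are all hypothetical; the point is only to show how specialized agents can react to each other's events without a central controller.

```python
from collections import defaultdict


class EventBus:
    """Minimal synchronous publish/subscribe bus shared by all agents."""

    def __init__(self):
        self.handlers = defaultdict(list)
        self.log = []  # record of published events, in order

    def subscribe(self, event, handler):
        self.handlers[event].append(handler)

    def publish(self, event, payload):
        self.log.append(event)
        for handler in self.handlers[event]:
            handler(payload)


bus = EventBus()
triaged = []


def risk_agent(change):
    # Assumed rule: changes touching payment code are high risk.
    if any("payment" in path for path in change["files"]):
        bus.publish("risk_detected", {"tests": ["checkout", "refund"]})


def execution_agent(plan):
    # Stand-in for real execution: pretend the "refund" test fails.
    failures = [test for test in plan["tests"] if test == "refund"]
    if failures:
        bus.publish("tests_failed", {"failures": failures})


def triage_agent(result):
    # Route each failure to a hypothetical owning team.
    triaged.extend((failure, "payments-team") for failure in result["failures"])


bus.subscribe("code_pushed", risk_agent)
bus.subscribe("risk_detected", execution_agent)
bus.subscribe("tests_failed", triage_agent)

bus.publish("code_pushed", {"files": ["src/payment/gateway.py"]})
```

A single `code_pushed` event cascades through risk analysis, execution, and triage, and each agent only knows about the events it consumes and emits.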

Testing AI Systems with AI Agents

As AI-powered systems become more common, testing the AI itself becomes essential because traditional automation is designed for deterministic behavior, not probabilistic outcomes.

- Hallucination detection testing uses AI agents to repeatedly probe models with varied and adversarial prompts to identify confident but incorrect or fabricated responses.

- Prompt robustness testing evaluates how small changes in prompt wording or structure affect output stability, safety, and correctness.

- Bias and fairness validation leverages AI agents to test responses across diverse demographics, contexts, and linguistic variations to uncover hidden bias.

- Non-determinism testing measures output variability across repeated executions to ensure results stay within acceptable quality and risk thresholds.

- Model drift detection continuously compares current model behavior with historical baselines to detect unintended changes over time.
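Non-determinism testing from the list above can be sketched as running the same prompt many times and measuring how often the most common answer appears. The stub model below is a hypothetical stand-in driven by a seeded random generator; a real test would call the actual model and compare the score against a threshold agreed on by the team.

```python
import random
from collections import Counter


def consistency(model, prompt, runs=20):
    """Share of runs that return the most common answer (1.0 = fully stable)."""
    answers = Counter(model(prompt) for _ in range(runs))
    return answers.most_common(1)[0][1] / runs


def make_stub_model(seed, error_rate):
    """Hypothetical stand-in for a model call with a configurable error rate."""
    rng = random.Random(seed)

    def model(prompt):
        # Returns the wrong answer with probability error_rate.
        return "Paris" if rng.random() >= error_rate else "Lyon"

    return model


stable = consistency(make_stub_model(seed=1, error_rate=0.0), "Capital of France?")
noisy = consistency(make_stub_model(seed=1, error_rate=0.5), "Capital of France?")
```

The stable stub always scores 1.0, while the noisy stub scores lower; a test could fail the build whenever the score drops below an acceptable threshold.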

Read: How to use AI to test AI.

Limitations and Guardrails of AI Agents

| Focus | Limitation / Risk | Required Guardrail |
|---|---|---|
| Test intent | AI agents may hallucinate test scenarios that look correct but do not match real business intent | Human validation of critical test objectives and acceptance criteria |
| Generalization | Agents can overgeneralize patterns across contexts where they do not apply | Context-aware constraints and domain-specific rules |
| Test coverage | AI-generated coverage can create false confidence without meaningful validation | Risk-based coverage review and quality thresholds |
| Decision authority | Fully autonomous agents may make unchecked quality decisions | Human-in-the-loop governance for approvals and overrides |
| Explainability | AI-driven test selection and prioritization may be opaque | Transparent reasoning, traceability, and explainable test decisions |

Conclusion

“In the race for quality, there is no finish line” – David T. Kearns.

AI agents like testRigor improve software testing by automating repetitive tasks, providing more test coverage, and helping detect defects quickly using plain English. These agents can optimize the overall testing process through generative AI and NLP.

As AI technology evolves, these agents are expected to become even more sophisticated and intelligent. This will further enhance their capabilities and applications in software testing. The trend has just started, setting a solid base for better quality, faster delivery, and minimal effort. The future looks promising and bright with these intelligent AI agents.
