Big Data Testing: Complete Guide

With the rise of the internet, social media, and digital devices, data generation is booming. Data now comes from well beyond traditional sources like spreadsheets. Think sensor data from IoT devices, social media feeds, and customer clickstreams.

Businesses need more than basic summaries. They crave deeper insights to understand customer behavior, optimize operations, and identify new opportunities. Traditional data tools were designed for smaller, structured datasets, so big data demanded new technologies and approaches to capture, store, process, and analyze this vast and varied information. These technologies fill the gap, allowing us to harness the power of this data deluge.

What is big data?

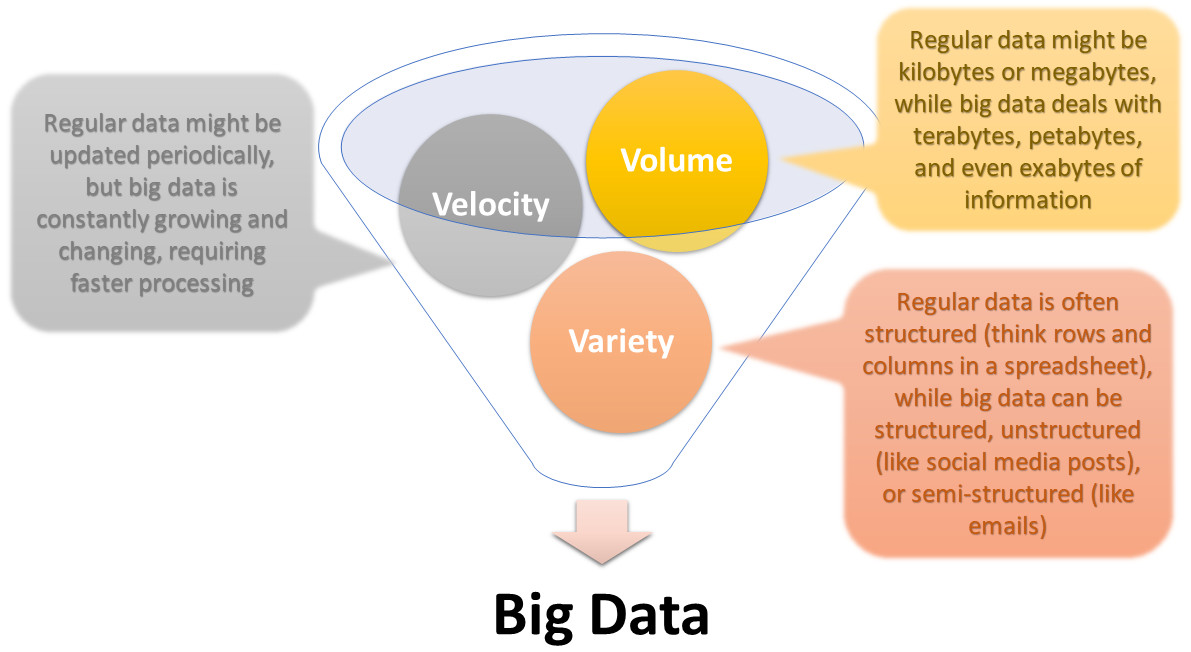

“Big Data” refers to extremely large datasets that cannot be effectively processed with traditional data processing techniques due to their volume, velocity, and variety. It’s different from regular data, primarily in scale and complexity.

3 V’s of big data

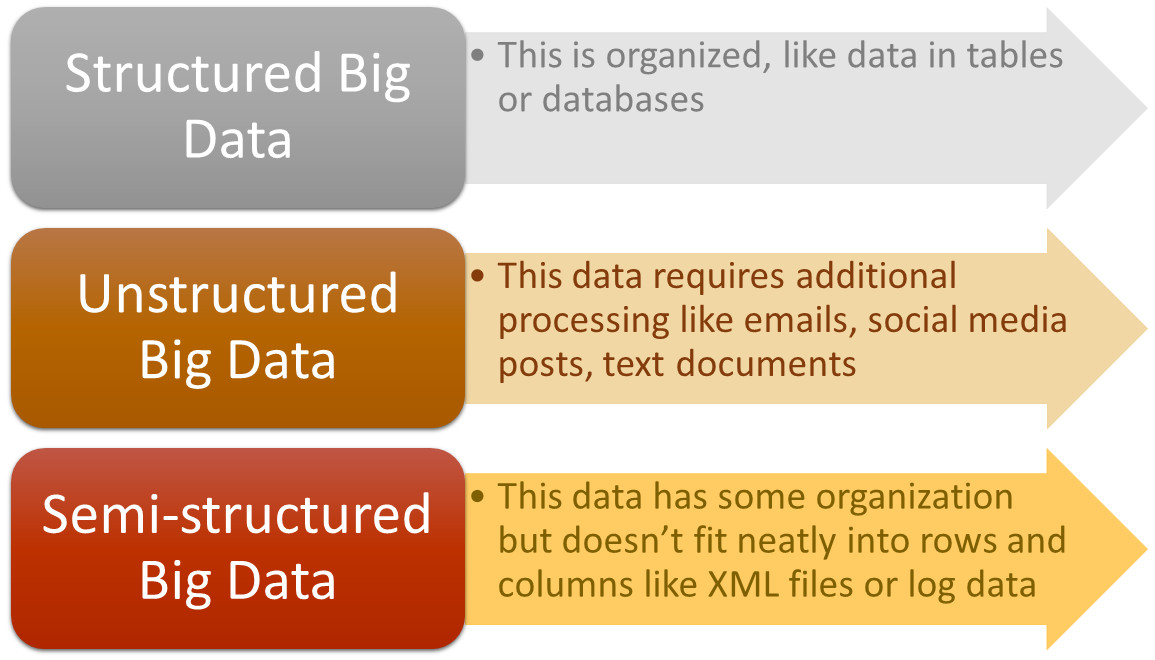

Types of Big Data

What is big data testing?

Big data testing primarily focuses on how well an application handles big data. It ensures the application can correctly:

- Ingest the big data from various sources (like social media feeds and sensor data) despite its volume, variety, and velocity.

- Process the data efficiently, extracting meaningful insights without getting bogged down by the massive size.

- Store the data reliably and securely, ensuring its accuracy and accessibility for future analysis.

- Output the results in a usable format, providing valuable insights to users without errors or inconsistencies.

The goal is not only to ensure that the data is of high quality and integrity but also that the applications processing the data can do so efficiently and accurately.

Simple analogy

Imagine a chef preparing a giant pot of soup (big data). Testing the soup itself might involve checking for the freshness of ingredients (data quality). But big data testing is more like ensuring the chef has the right tools (application) to handle the large volume, maintain consistent flavors (data processing), and serve the soup efficiently (application performance).

So, you can say that big data testing is about making sure the application is a skilled chef who can work effectively in a big kitchen (big data environment).

How is big data testing different from data-driven testing?

The following table shows how these forms of testing vary.

| Parameter | Big Data Testing | Data-driven Testing |
| --- | --- | --- |
| Focus | Focuses on ensuring big data applications function correctly and efficiently when dealing with massive, varied, and fast-flowing data (volume, variety, velocity). It tests how the application interacts with big data throughout its lifecycle (ingestion, processing, storage, output). | Focuses on automating test cases using external data sources (spreadsheets, databases) to improve test coverage and efficiency. It tests the application’s functionality with various sets of input data to identify edge cases and ensure the application works as expected under different conditions. |
| Data characteristics | Deals with massive datasets that traditional testing tools can’t handle. It considers the unique challenges of big data like volume, variety, and velocity. | Typically uses smaller, structured data sets to automate test cases. The data itself is not the main focus; it’s a means to efficiently execute numerous test cases. |
| Tools and techniques | Often utilizes specialized tools and techniques designed to handle the scale and complexity of big data. These tools can handle data subsetting (using a smaller portion for testing) or data anonymization (removing sensitive information) to manage the data volume. | Leverages existing testing frameworks and tools with external data sources. The focus is on reusability and automating repetitive test cases with different data sets. |
| Example | Imagine testing a retail application that analyzes customer purchase data from millions of transactions daily. Big data testing would ensure the application can efficiently ingest, process, and analyze this massive data stream to identify buying trends. | Imagine testing a login functionality. Data-driven testing could use a spreadsheet with various usernames and passwords (valid, invalid, empty) to automate login tests and ensure the application handles different scenarios correctly. |
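To make the right-hand column concrete, here is a minimal data-driven test sketch in Python: one test body runs once per row of a small table of inputs. The `login` function, its rules, and the CSV layout are hypothetical stand-ins for the application under test and its spreadsheet; only the pattern (one check, many data rows) matters here.

```python
import csv
import io

# Hypothetical application rule standing in for the real login feature.
VALID_USERS = {"alice": "s3cret!"}

def login(username, password):
    if not username or not password:
        return "missing credentials"
    if VALID_USERS.get(username) == password:
        return "ok"
    return "invalid credentials"

# Test data that would normally live in a spreadsheet or CSV file (hypothetical).
TEST_DATA = """username,password,expected
alice,s3cret!,ok
alice,wrong,invalid credentials
bob,s3cret!,invalid credentials
,s3cret!,missing credentials
alice,,missing credentials
"""

def run_data_driven_tests(csv_text):
    """Run the same login check once per CSV row; return the rows that failed."""
    failures = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = login(row["username"], row["password"])
        if actual != row["expected"]:
            failures.append((row["username"], row["password"], row["expected"], actual))
    return failures

print(run_data_driven_tests(TEST_DATA))  # [] when every row behaves as expected
```

Adding a scenario means adding a row to the data, not writing a new test, which is what distinguishes this approach from the volume-driven concerns of big data testing.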

Components of big data testing

Big data testing goes beyond just the data itself and focuses on how well the application interacts with the big data. The primary components are:

- Test Data: Representative sample data mimicking real-world big data is used for testing scenarios. This data should reflect the actual data’s volume, variety, and velocity to ensure realistic testing. Techniques like data subsetting or anonymization can be used to manage the scale of test data. Read: Optimizing Software Testing with Effective Test Data Management Tools.

- Test Environment: A dedicated environment replicating the production setup is crucial. This environment should have sufficient resources like storage, processing power, and network bandwidth to handle the demands of testing big data applications. Read: What is a Test Environment? A Quick-Start Guide.
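As a rough illustration of data subsetting, the sketch below draws a reproducible, stratified sample, so each category (here, each data source) keeps roughly its original share in the smaller test set. The record layout and the `source` field are assumptions for the example; at real scale the same idea would typically run inside the processing engine or database rather than in plain Python.

```python
import random
from collections import defaultdict

def stratified_subset(records, key, fraction, seed=42):
    """Return a reproducible sample that keeps each stratum's share of the data.

    records  -- list of dicts (hypothetical record layout)
    key      -- field used to group records into strata, e.g. "source"
    fraction -- share of each stratum to keep, in (0, 1]
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed: every test run gets the same subset
    strata = defaultdict(list)
    for record in records:
        strata[record[key]].append(record)
    subset = []
    for group in strata.values():
        # keep at least one record per stratum so rare categories are not lost
        k = max(1, round(len(group) * fraction))
        subset.extend(rng.sample(group, k))
    return subset

# Hypothetical dataset: 900 clickstream events and 100 sensor readings
data = [{"id": i, "source": "clickstream"} for i in range(900)]
data += [{"id": 900 + i, "source": "sensor"} for i in range(100)]
sample = stratified_subset(data, key="source", fraction=0.1)
print(len(sample))  # 100 (90 clickstream + 10 sensor records)
```

The fixed seed matters for testing: the same subset is produced on every run, so a failure can be reproduced against exactly the same records.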

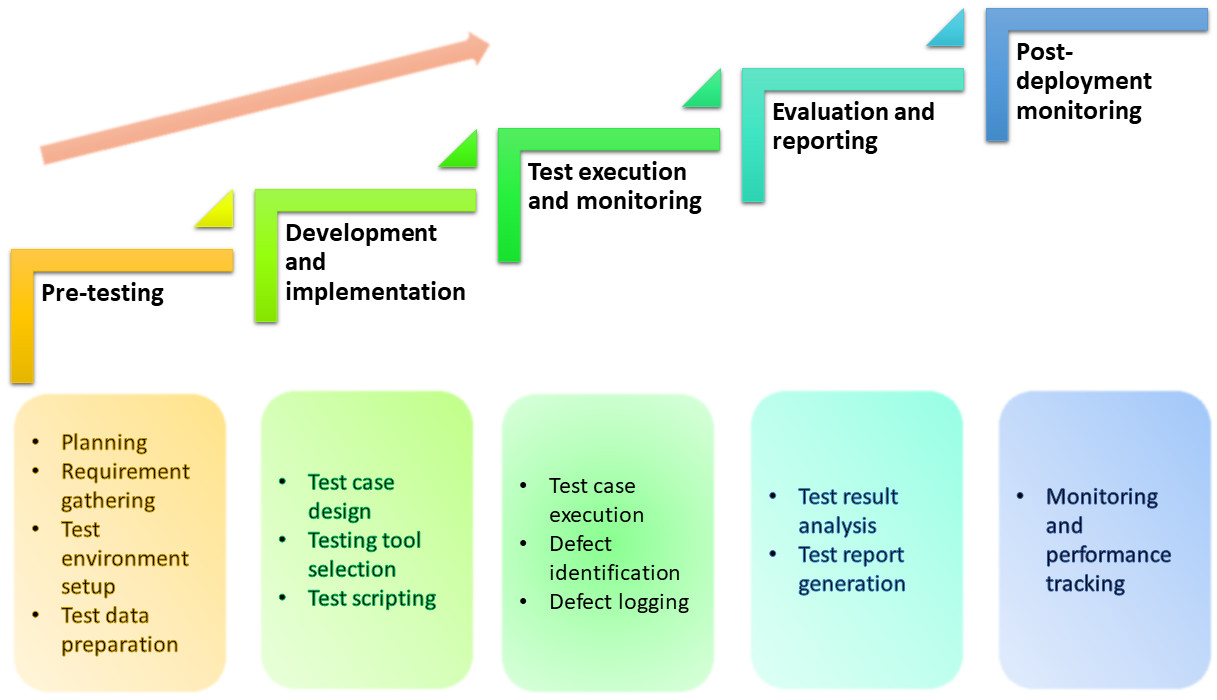

Stages in big data testing

Types of big data testing

The following types of testing are commonly performed as part of big data testing:

Functional testing

You can consider this form of testing the cornerstone of big data testing because it verifies whether the application performs its intended tasks as expected across the entire big data lifecycle. It includes:

- Data ingestion testing: Checks if data is correctly extracted from various sources and loaded into the system.

- Data processing testing: Validates if data transformation and processing happen according to defined rules and logic.

- Data storage testing: Ensures data is stored reliably and securely in the big data storage system.

- Data output testing: Verifies if processed data is delivered in the desired format (reports, dashboards) to users. Read more about Test Reports and Test Logs.
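One common way to automate data ingestion testing is source-to-target reconciliation: compare the record count and a content checksum of what the source emitted with what landed in the target store. The sketch below assumes both sides can be read as iterables of row tuples; `row_digest`, `fingerprint`, and `reconcile` are hypothetical helper names, not part of any particular tool.

```python
import hashlib

def row_digest(row):
    """Stable 64-bit digest of one row (a tuple of basic Python values)."""
    h = hashlib.sha256(repr(row).encode("utf-8")).digest()
    return int.from_bytes(h[:8], "big")

def fingerprint(rows):
    """Order-independent fingerprint: (row count, sum of row digests mod 2**64)."""
    count, total = 0, 0
    for row in rows:
        count += 1
        total = (total + row_digest(row)) % 2**64
    return count, total

def reconcile(source_rows, target_rows):
    """Return a list of problems; an empty list means the load matches the source."""
    src_count, src_sum = fingerprint(source_rows)
    tgt_count, tgt_sum = fingerprint(target_rows)
    problems = []
    if src_count != tgt_count:
        problems.append(f"row count mismatch: source={src_count}, target={tgt_count}")
    elif src_sum != tgt_sum:
        problems.append("row contents differ between source and target")
    return problems

source = [(1, "alice", 30.0), (2, "bob", 12.5), (3, "carol", 7.25)]
loaded_ok = [(3, "carol", 7.25), (1, "alice", 30.0), (2, "bob", 12.5)]  # same rows, new order
loaded_bad = [(1, "alice", 30.0), (2, "bob", 12.5), (3, "carol", 7.2)]  # corrupted value

print(reconcile(source, loaded_ok))   # []
print(reconcile(source, loaded_bad))  # ['row contents differ between source and target']
```

Comparing compact aggregates instead of diffing every row keeps the check cheap on large tables. Because the fingerprint is a sum, row order does not matter, which suits distributed loads where ordering is not guaranteed; when a mismatch appears, a row-level diff of the affected partition can pinpoint the bad records.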

Performance testing

Performance testing is crucial for big data applications because of the massive datasets involved. It focuses on how the application behaves under various loads, which is assessed through:

- Load testing: Simulates real-world data volumes to assess processing speed, response times, and scalability.

- Stress testing: Pushes the application beyond normal loads to identify bottlenecks and ensure it can handle peak usage scenarios.

- Volume testing: Evaluates how the application performs with increasing data sizes to ensure it can keep up with data growth.
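A minimal sketch of volume testing: run a processing step against progressively larger synthetic inputs, confirm no records are lost, and record throughput at each size. `process_batch` is a hypothetical stand-in for the real job; in practice you would drive the actual pipeline and compare the results against agreed performance thresholds.

```python
import time
from collections import defaultdict

def process_batch(records):
    """Hypothetical stand-in for the job under test: total purchases per customer."""
    totals = defaultdict(float)
    for customer_id, amount in records:
        totals[customer_id] += amount
    return totals

def measure_throughput(sizes, customers=1_000):
    """Run process_batch on growing synthetic inputs; return {size: records per second}."""
    results = {}
    for size in sizes:
        records = [(i % customers, 1.0) for i in range(size)]
        start = time.perf_counter()
        totals = process_batch(records)
        elapsed = time.perf_counter() - start
        # correctness check: the job must not lose records as volume grows
        assert sum(totals.values()) == size, "records lost during processing"
        results[size] = size / elapsed if elapsed > 0 else float("inf")
    return results

for size, rate in measure_throughput([10_000, 50_000, 250_000]).items():
    print(f"{size:>9,} records: {rate:,.0f} records/s")
```

Absolute numbers depend on the machine, so the useful signal is the trend: throughput that drops sharply as the input grows points to a scaling bottleneck worth investigating with load and stress tests.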

Data quality testing

Because big data often comes from diverse sources and can be messy, data quality testing focuses on the integrity of the data throughout the pipeline. This assessment happens through:

- Accuracy testing: Verifies if data is free from errors and reflects real-world values.

- Completeness testing: Ensures all required data elements are present and accounted for.

- Consistency testing: Checks if data adheres to defined formats and standards across different sources and processing stages.
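The three checks above can be expressed as simple per-record rules. The sketch assumes a hypothetical customer-orders feed; the field names, the email pattern, and the plausibility rules are illustrative, and real rules would come from the data contract agreed with the source owners.

```python
import re
from datetime import date

# Hypothetical rules for a customer-orders feed.
REQUIRED_FIELDS = ("order_id", "customer_email", "amount", "order_date")
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")

def check_record(record, today=None):
    """Return a list of data quality issues found in one record."""
    today = today or date.today()
    issues = []
    # Completeness: every required field must be present and non-empty
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        issues.append(f"missing fields: {', '.join(missing)}")
    # Consistency: values must follow the agreed format
    email = record.get("customer_email")
    if email and not EMAIL_PATTERN.match(email):
        issues.append("customer_email has an invalid format")
    # Accuracy: values must be plausible real-world values
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        issues.append("amount is negative")
    order_date = record.get("order_date")
    if isinstance(order_date, date) and order_date > today:
        issues.append("order_date is in the future")
    return issues

today = date(2024, 6, 1)  # fixed "today" so the example is reproducible
orders = [
    {"order_id": 1, "customer_email": "a@example.com", "amount": 19.99, "order_date": date(2024, 5, 30)},
    {"order_id": 2, "customer_email": "not-an-email", "amount": -5, "order_date": date(2024, 5, 31)},
    {"order_id": 3, "customer_email": "", "amount": 10.0, "order_date": date(2024, 7, 1)},
]
for i, order in enumerate(orders):
    print(i, check_record(order, today))
# 0 []
# 1 ['customer_email has an invalid format', 'amount is negative']
# 2 ['missing fields: customer_email', 'order_date is in the future']
```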

Security testing

Big data security is critical as these systems often handle sensitive information. Security testing identifies vulnerabilities to prevent unauthorized access, data breaches, or manipulation. You can test data security through:

- Vulnerability scanning: Identifies weaknesses in the application and data storage infrastructure.

- Penetration testing: Simulates cyberattacks to assess the application’s ability to withstand real-world security threats.

- Access control testing: Verifies that only authorized users have access to sensitive data within the big data system.
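Access control testing lends itself to an exhaustive matrix: for every role and every sensitive field, check whether access is allowed and compare that with what should happen. In the sketch below, `POLICY`, the roles, and `can_read` are hypothetical placeholders; in a real system `can_read` would call the platform's own authorization layer.

```python
import itertools

# Hypothetical policy under test: which roles may read which sensitive columns.
POLICY = {
    "customer_email": {"support", "admin"},
    "card_number": {"admin"},
    "purchase_total": {"analyst", "support", "admin"},
}
ROLES = ("analyst", "support", "admin", "guest")

def can_read(role, column):
    """Placeholder for the system's real authorization check."""
    return role in POLICY.get(column, set())

# Expected access matrix, written independently of POLICY (the test oracle).
EXPECTED = {
    ("admin", "customer_email"), ("admin", "card_number"), ("admin", "purchase_total"),
    ("support", "customer_email"), ("support", "purchase_total"),
    ("analyst", "purchase_total"),
}

def find_access_violations():
    """Compare actual decisions with the expected matrix for every role/column pair."""
    violations = []
    for role, column in itertools.product(ROLES, POLICY):
        allowed = can_read(role, column)
        expected = (role, column) in EXPECTED
        if allowed != expected:
            kind = "unexpected access" if allowed else "access wrongly denied"
            violations.append(f"{kind}: {role} -> {column}")
    return violations

print(find_access_violations())  # [] while policy and expectations agree
```

Keeping the expected matrix separate from the policy is the point of the exercise: a test that derives its expectations from the same policy it checks cannot catch a wrong policy.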

How does big data testing help?

Big data testing helps ensure that your efforts to harness the insights hidden in big data actually transform your business.

- Enhanced data quality: Traditional testing methods might struggle with the sheer volume and variety of big data. Big data testing specifically addresses these challenges, ensuring data accuracy, completeness, and consistency throughout the processing pipeline. This prevents errors from creeping in and allows for more reliable insights based on clean data.

- Improved application performance: Big data applications deal with massive amounts of data flowing in at high velocity. Big data testing helps identify bottlenecks and inefficiencies within the application. By optimizing performance, you can ensure the application can handle these data demands efficiently, leading to faster processing times and quicker results.

- Trustworthy results and insights: Testing verifies the application’s functionality and the underlying data quality. This builds confidence in the results and insights generated from big data analysis. You can make crucial decisions based on reliable data, reducing the risk of basing strategies on misleading or inaccurate information.

- Reduced risks and costs: Big data testing acts as a proactive measure. By identifying potential issues early in the development process, it helps prevent problems from surfacing later in production. This reduces the risk of errors, system crashes, and costly downtime associated with fixing issues in a deployed application. Read more about Risk-based Testing.

- Increased ROI from big data investments: Big data initiatives can be significant investments. Through big data testing, you can ensure that these investments are worthwhile. If big data applications function correctly and deliver valuable insights, organizations can maximize the return on investment (ROI) from their big data endeavors. Read: How to Get The Best ROI in Test Automation.

Challenges in big data testing

Working with so much data is challenging, and volume is only part of the problem. Here are the main challenges:

- Volume of data: One of the most significant challenges is the sheer volume of data. Testing systems need to handle extremely large datasets, making traditional testing methods inadequate. This requires specialized tools and techniques to efficiently manage and manipulate vast amounts of data.

- Variety of data: Big data comes in various forms—structured, unstructured, and semi-structured. Each type of data may require different tools and approaches for effective testing. Handling diverse data types and ensuring their quality and integrity can be complex and resource-intensive.

- Velocity of data: The high speed at which data flows into systems, often in real-time, adds to the complexity of testing. Ensuring the system can process this incoming data quickly and accurately, without bottlenecks, is a significant challenge.

- Complexity of data transformations: Big data applications often involve complex transformations and processing logic. Testing these transformations for accuracy and efficiency, especially when they involve multiple nested operations, can be difficult.

- Scalability and infrastructure: Testing needs to ensure that the system can scale up or down based on the demand. This scalability testing is crucial to handle growth in data volume and concurrent users, which often requires robust infrastructure and can be costly to simulate and test. Read in detail about Test Scalability.

- Integration testing: Big data systems frequently interact with numerous other systems and components. Ensuring that all integrations work seamlessly and that data flows correctly between these components without loss or corruption is a formidable task. Read: Integration Testing vs End-to-End Testing.

- Data privacy and security: With stringent data protection laws like GDPR and HIPAA, testing must also ensure that the system complies with legal requirements for data security and privacy. This involves testing for data breaches and unauthorized access, and ensuring that all data handling follows relevant regulations.

- Lack of expertise: There is often a shortage of skilled professionals who understand both big data technologies and testing methodologies. This lack of expertise can hinder the development and implementation of effective big data testing strategies.

- Tool and environment availability: Finding the right tools that can handle big data testing requirements is often a challenge. Moreover, setting up a test environment that mirrors the production scale for accurate testing can be resource-intensive and expensive.

- Ensuring data quality: Maintaining high data quality standards across different datasets and ensuring consistency, accuracy, and completeness of data during and after transformations are critical and challenging.

Strategies for big data testing

The following strategies will help you be thorough with big data testing.

Planning and design strategies

- Define testing goals: Clearly define what you want to achieve with big data testing. Are you focusing on data quality, application functionality, or performance? Having clear goals helps create targeted test plans.

- Understand the data: Gain a thorough understanding of the data you’re working with, including its source, format, and business meaning. This knowledge is essential for designing relevant test scenarios.

- Prioritize testing: Given the vast amount of data, prioritize what needs to be tested first. Focus on critical data flows and functionalities that have the biggest impact on business goals. Read: Which Tests Should I Automate First?

- Choose the right tools: Select big data testing tools that cater to the specific needs of your project and data environment. Consider tools for data subsetting (using a smaller portion for testing), data anonymization (removing sensitive information), and test automation. Read: Top 7 Automation Testing Tools to Consider.

Test execution strategies

- Leverage test automation: Big data volumes necessitate automation. Invest in automating repetitive test cases to improve efficiency and test coverage.

- Focus on data quality: Ensure the accuracy, completeness, and consistency of data throughout the big data pipeline. Utilize data quality testing tools to identify and rectify data issues early on.

- Performance testing is key: Big data applications need to handle massive data loads efficiently. Conduct performance testing to identify bottlenecks and ensure the application scales effectively with increasing data volumes.

- Security testing is crucial: Big data often contains sensitive information. Implement security testing measures to safeguard test data environments and prevent unauthorized access or breaches.

Collaboration and communication strategies

- Bridge the gap: Foster good communication and collaboration between development, testing, and data science teams. Everyone involved should understand the testing goals and big data environment.

- Seek expertise: Consider involving big data specialists in the testing process. Their knowledge of the data and big data technologies can be invaluable for designing effective test cases.

- Continuous improvement: Big data testing is an ongoing process. Regularly review and refine your testing strategy based on project progress and evolving data landscapes. Read more about What is Continuous Testing?

Here are some more strategies that will help you manage big data testing:

- Utilize data subsetting and anonymization: To manage the scale of testing, consider using a smaller representative sample of the data (subsetting) or removing sensitive information (anonymization).

- Test in a staged approach: Break down testing into smaller stages, focusing on specific functionalities or data flows at a time. This can help identify and address issues early in the development process.

- Stay updated on big data testing trends: The big data testing landscape is constantly evolving. Stay updated on new tools, techniques, and best practices to ensure your testing approach remains effective.
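The anonymization strategy listed above is often implemented with keyed hashing: each sensitive value is replaced with an HMAC token, so the same input always maps to the same token and joins across datasets keep working, while the original value cannot be read back without the key. Strictly, this is pseudonymization rather than full anonymization (GDPR, for instance, still treats pseudonymized data as personal data). The field names and key handling below are assumptions for illustration.

```python
import hashlib
import hmac

SENSITIVE_FIELDS = ("email", "phone")  # hypothetical; list the real PII columns here

def pseudonymize(record, key):
    """Return a copy of record with sensitive fields replaced by keyed-hash tokens."""
    masked = dict(record)
    for field in SENSITIVE_FIELDS:
        value = record.get(field)
        if value is None:
            continue  # absent or null fields are left as they are
        digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
        masked[field] = f"tok_{digest[:16]}"  # shortened, still deterministic token
    return masked

# Assumption: in practice the key comes from a secrets manager, never from source code.
key = b"test-environment-only-key"
orders = [
    {"order_id": 1, "email": "alice@example.com", "amount": 30.0},
    {"order_id": 2, "email": "alice@example.com", "amount": 12.5},
]
masked = [pseudonymize(r, key) for r in orders]
print(masked[0]["email"] == masked[1]["email"])  # True: same customer, same token
print("alice" in masked[0]["email"])             # False: raw value is gone
```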

How can testRigor help?

testRigor is an intelligent, generative AI-powered codeless test automation tool that lets you write test cases in plain English or any other natural language. It enables you to test web, mobile (hybrid, native), API, and desktop apps with minimum effort and maintenance.

Here are some features and benefits of testRigor that might help:

- Quick Test Creation: Create tests using testRigor’s generative AI feature; just provide the test case title/description, and testRigor’s generative AI engine will automatically generate most of the test steps. Tweak a bit, and the plain English (or any other natural language) automated test cases will be ready to run.

- Almost Zero Test Maintenance: There is no maintenance nightmare because there is no reliance on implementation details. This lack of XPath and CSS dependency ensures ultra-stable tests that are easy to maintain.

- Test LLMs and AI: You can use testRigor's intelligence to test your LLMs (Large Language Models) and AI features, including analyzing and testing real-time user sentiment so you can act on that information immediately.

- Database Testing: Execute database queries and validate the results fetched.

- Global Variables and Data Sets: You can import data from external files or create your own global variables and data sets in testRigor to use them in data-driven testing.

- Shift Left Testing: Leverage the power and advantages of shift left testing with testRigor. Create test cases early, even before engineers start working on code using Specification Driven Development (SDD).

Conclusion

Big data testing is not just about validating functionality; it’s about unlocking the true potential of big data. It empowers organizations to harness the power of information and make data-driven decisions that lead to success. As your big data journey continues, remember that big data testing is an ongoing process. By continuously refining your testing strategy and embracing new tools and techniques, you can ensure your big data initiatives deliver long-term value.

Additional resources

- Different Software Testing Types

- Functional Testing Types: An In-Depth Look

- Continuous Integration and Testing: Best Practices

- How to do End-to-end Testing with testRigor

- More Efficient Way to Do QA

- Top 10 Challenges in Automation Testing and their Solutions

Frequently Asked Questions (FAQs)

Big data testing is crucial because it ensures the integrity and quality of data, which is fundamental for making accurate business decisions. It also helps identify system bottlenecks, ensure high performance and scalability, and maintain data security.

The main components of big data testing include data staging validation, business logic validation, output validation, performance testing, and integration testing.

Big data testing deals with larger volumes of data, more complex data structures, and the need to process data at a higher velocity. Traditional data testing typically handles smaller, structured datasets with less emphasis on real-time processing.

Data security in big data testing involves implementing robust security protocols, conducting security vulnerability tests, and ensuring compliance with data protection regulations. Security testing tools and methods are used to detect potential security flaws and prevent data breaches.
