Learning Software Application Testing: A Guide

|

|

“Testing is an infinite process of comparing the invisible to the ambiguous in order to avoid the unthinkable happening to the anonymous“— James Bach.

Let’s start with a straightforward definition: software application testing is a process of verifying and validating that a software application works as intended and there are no errors in the functionality. Read here – What is software testing?

This article will help you understand the nitty-gritty aspects of software application testing with examples. So let’s go!

Why is Software Testing Important?

Testing is essential because it helps identify defects or issues in the software early in the development process. Early detection reduces the cost of fixing bugs later in the cycle, enhances the software’s reliability, and ensures it meets user expectations.

Types of Software Testing

Functional Testing

Functional testing evaluates the functional aspects of a software application, ensuring that it performs its intended functions correctly according to specified requirements. This type of testing checks if the software application behaves as expected when given specific inputs.

Types and Examples of Functional Testing

Unit Testing

- Objective: To test individual units(methods or functions) or software components in isolation. Developers typically do it during the coding phase.

- Example: Test a Login function or method to ensure it produces the expected output for given inputs.

Integration Testing

- Objective: To verify the interactions between integrated units or components.

- Example: Test how an e-commerce application’s Create Order module successfully communicates with Payment services.

System Testing

- Objective: To assess the entire software system as a whole and test the system’s functionality and interactions between its components.

- Example: Test the entire e-commerce platform to ensure that all features like product browsing, cart management, and payment processing work together seamlessly.

Acceptance Testing

- Objective: To determine if the software meets the specified acceptance criteria and is ready for deployment. End-users or stakeholders usually perform it.

- Example: End-users test an e-learning platform to ensure it meets their educational needs, navigation, intuitiveness, and usability expectations.

Smoke Testing

- Objective: To perform a quick, preliminary check to see if the software is stable enough for more in-depth testing. A subset of test cases covering the most critical functionality after an initial/unstable build.

- Example: Test if a web application’s homepage loads and essential links work after an initial build.

Sanity Testing

- Objective: To verify that specific functionalities or code changes are working as expected requires focused testing to check only the areas affected by recent code changes.

- Example: After fixing a bug related to user authentication, test only the login functionality.

Regression Testing

- Objective: To ensure that new changes or enhancements have not introduced defects or impacted existing functionality. Re-running previously executed test cases and including new ones for the modified code.

- Example: After adding Credit Card Payment features to a mobile app, run all existing payment-related test cases to ensure they still pass.

User Interface (UI) Testing

- Objective: To evaluate the user interface for consistency, usability, and adherence to design guidelines. It involves checking the visual aspects and user interactions of the software.

- Example: Ensure that all buttons, menus, and forms in a web application are displayed correctly and function as expected.

Compatibility Testing

- Objective: To verify that the software works correctly on different devices, browsers, and operating systems while testing on various configurations to ensure broad compatibility.

- Example: Check a website’s performance and functionality on Chrome, Firefox, and Safari browsers.

Non-Functional Testing

Non-functional testing evaluates aspects of a software application beyond its core functionality. It assesses performance, security, usability, reliability, and scalability. It aims to measure how well the software meets user expectations and how it performs under various conditions.

Types and Examples of Non-Functional Testing

Performance Testing: Performance testing assesses the application’s responsiveness, speed, and scalability. A software application’s performance can be tested through:

- Load Testing: Determines how the application performs under heavy loads. For instance, testing how many users can simultaneously use a website before it becomes slow or unresponsive.

- Stress Testing: Pushes the system beyond its limits to evaluate its behavior under extreme conditions. For example, testing if a web server can handle a sudden spike in traffic.

Security Testing: Security testing identifies vulnerabilities and ensures the software application is protected against unauthorized access and data breaches. Example tests include:

- Penetration Testing: Attempts to breach the application’s security to find weaknesses that hackers could exploit.

- Authentication Testing: Ensures that the authentication mechanisms, such as username and password validation, work securely and as intended.

Usability Testing: Usability testing evaluates the user-friendliness of the application. Assess factors like user interface design, ease of navigation, and overall user experience. Check if users can easily find essential features and complete everyday tasks without confusion.

Reliability Testing: Reliability testing checks how stable and dependable the software is over time. It may involve:

- Testing for memory leaks or crashes that could occur during prolonged use.

- Assessing how the application handles long-term data storage without corruption.

Scalability Testing: Scalability testing determines how well the application can handle increased or decreased load or growth(scale up and down) in the user base. For example, testing whether a web application can accommodate more users as the business expands.

Use Case of Software Application Testing

E-commerce Website Testing

Consider a company developing an e-commerce website to sell products online. Before launching the website to the public, they need to thoroughly test it to ensure it works as expected and provides a smooth shopping experience for customers. Here’s how software testing might be applied in this scenario:

- Functional Testing: This involves checking if all the website’s functions work correctly. Verify that customers can browse products, add items to their cart, proceed to checkout, make payments, and receive order confirmations without encountering any errors. For instance, ensure that the “Add to Cart” button adds the correct product and accurately calculates the total cost.

- Performance Testing: Simulate different scenarios, such as multiple users shopping simultaneously, to assess the website’s response times and scalability. Helps to identify and fix issues like slow page loading times.

- Security Testing: Check the website for vulnerabilities and ensuring that customer data is secure. Attempt to hack into the system or exploit security weaknesses to assess how well the website protects user information.

- Usability Testing: Assess whether customers can easily navigate the site, find products, and complete transactions. Identify issues like confusing layouts, broken links, or unclear instructions and provide recommendations for improvement.

- Compatibility Testing: Verify that the website functions as expected on different platforms, such as desktop computers, smartphones, and tablets. Check compatibility with Chrome, Firefox, Safari, and Edge.

- Regression Testing: Ensure that new features or bug fixes haven’t introduced new issues or affected existing functionality. For example, if a bug were fixed in the order cancellation process, verify that this fix didn’t cause problems in order creation or return process.

- Load Testing: Simulate many users accessing the site simultaneously to determine if it can handle the load without crashing or experiencing significant slowdowns.

- User Acceptance Testing (UAT): UAT involves real users or stakeholders confirm that the website meets their expectations and fulfills the business requirements. Any issues or suggestions are documented and addressed before the final release.

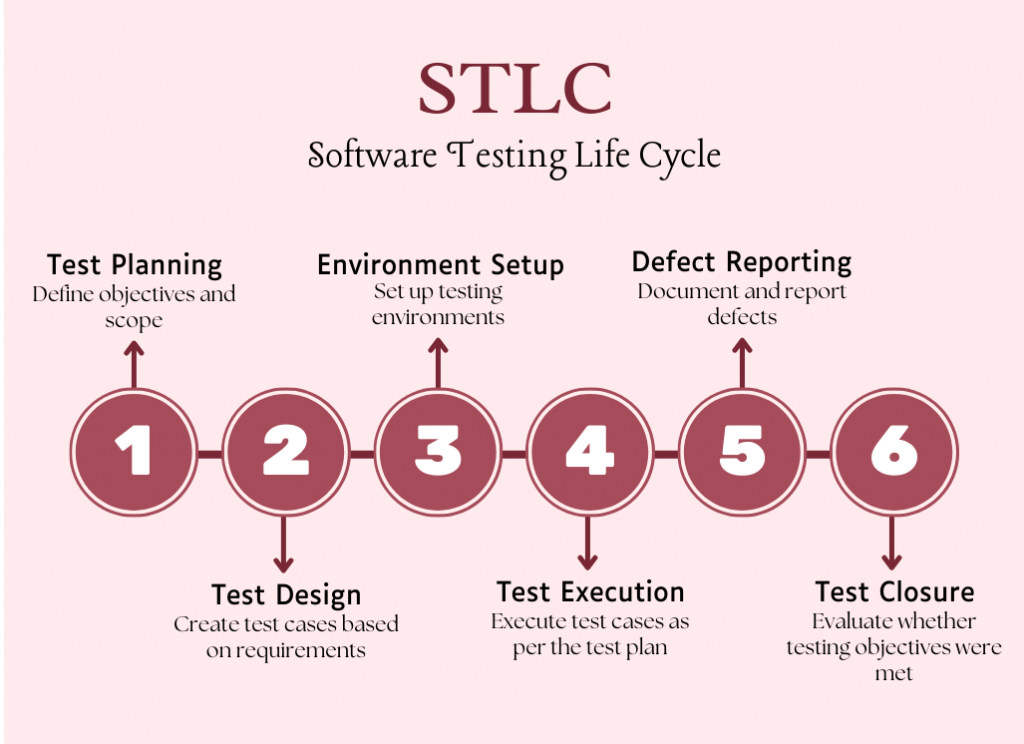

Software Testing Life Cycle

Test Planning

- Define objectives and scope.

- Identify test resources, including personnel and tools.

- Develop a test plan outlining the testing strategy. Know more about test plan vs. test strategy.

You can access test plan and test strategy templates for your testing process.

Test Design

- Create test cases based on requirements.

- Determine test data and test environment requirements.

- Develop the test scripts manually or write automation scripts using programming languages.

With the intelligent AI-powered tool testRigor, you can create test scripts automatically in plain English within seconds. You only need to provide the test case title, and testRigor’s generative AI engine will take care of the rest. Know more here.

With testRigor, you can also write your test cases in plain English without any CSS/XPath locators and create ultra-stable test cases with the text you see on the UI.

type "Shirt" click on "Search" select 1st option from "Select Shirt" check that page contains "XL"

Environment Setup

- Install the necessary software, servers, hardware components, establishing the network infrastructure.

- Setting up testing environments (e.g., development, staging, production)

- Procure essential software tools required for the application testing process.

Test Execution

- Execute test cases as per the test plan.

- Record and report test results.

- Monitor test progress through CI/CD pipelines and make adjustments if necessary.

Defect Reporting and Management

- Document and report defects using a defect tracking system.

- Prioritize defects based on severity and impact. Learn here about defect priority and severity and their differences.

- Work with developers to resolve issues.

Test Closure

- Evaluate whether testing objectives were met.

- Generate test summary reports.

- Perform a post-mortem to identify areas for improvement.

Here is a guide on the software application testing lifecycle.

Testing Methodologies and Techniques

Waterfall vs. Agile Testing

Waterfall Testing Methodology: Testing is typically conducted linearly and sequentially, following the phases of software development step by step. Each stage, including requirements, design, coding, testing, and deployment, is completed before moving to the next.

Testing in Waterfall is often seen as a distinct phase after development. It’s rigorous and thorough, but changes are challenging to accommodate once the project has progressed beyond the initial stages.

Agile Testing Methodology: It is an iterative and collaborative approach where testing is integrated throughout the development cycle. Agile teams work in short iterations, delivering small increments of working software. Testing in Agile is continuous and adaptive, with testers collaborating closely with developers and stakeholders.

This approach allows flexibility and faster feedback, enabling the team to respond quickly to changing requirements and produce a more adaptable and customer-centric product.

Black Box vs. White Box Testing

Black Box Testing: Testers assess the software’s functionality without knowing its internal code or structure. They focus on inputs and expected outputs, checking if the software performs as expected based on its specifications. Black box testing is user-centric and emphasizes end-user experience.

White Box Testing: It involves examining the internal code and logic of the software. Testers know the code’s structure and use this information to design test cases that assess the software’s inner workings, including code coverage and control flow. White box testing is developer-centric and aims to ensure code correctness and robustness.

Get a holistic view of black, white, and gray box testing.

Manual vs. Automated Testing

Manual Testing: Human testers execute test cases manually, interacting with the software as end-users would. They observe and evaluate the software’s behavior, identifying defects and verifying its functionality. Manual testing is effective for exploratory testing, usability assessment, and scenarios where frequent changes occur. Here is a beginner’s guide to manual testing.

Automation Testing: Involves using specialized tools and scripts to automate test case execution. It benefits repetitive and regression testing, allowing faster and more consistent test execution. Automation helps catch regressions early and ensures software stability, but it requires initial setup and maintenance. Find the differences between manual and automation software testing.

Here’s an informative article about all testing methodologies to propel your QA process.

Common Testing Challenges and Solutions

Handling Changing Requirements

- Challenge: Requirements for software projects can change frequently, leading to test cases needing to be updated and relevant. These updates can result in wasted effort, missed defects, and delays in testing.

- Solution: Communicate openly with stakeholders to stay informed about changing requirements. Agile methodologies encourage continuous collaboration, making it easier to adapt to changes quickly.

Regression Testing

- Challenge: As software evolves with new features or fixes, it’s crucial to ensure that existing functionality remains intact. Manual regression testing can be time-consuming and error-prone, particularly in complex applications.

- Solution: Test automation is a valuable tool for addressing this challenge. Automated tests can be run efficiently to check existing functionality after each code change, reducing the burden of manual regression testing.

Testing in Agile and DevOps Environments

- Challenge: Agile and DevOps methodologies promote rapid development and frequent releases, which can create time constraints for testing. Continuous integration and continuous delivery (CI/CD) pipelines require automated testing to keep pace.

- Solution: Agile and DevOps teams must integrate testing early in the development cycle. Test automation, parallel testing, and containerization tools streamline testing within these fast-paced environments.

testRigor allows parallel, cross-browser, and cross-platform testing and integrates quickly with all leading CI/CD tools to solve your Agile and DevOps-related testing challenges.

Test Data Management Challenges

- Challenge: Acquiring and managing appropriate test data can be challenging, especially for complex applications with various data scenarios. Privacy regulations like GDPR can restrict the use of actual user data in testing.

- Solution: Use data anonymization or generation tools to create realistic test data compliant with data protection laws. Additionally, establish clear data management procedures and maintain separate test environments with representative data sets.

Use testRigor to generate data based on detected field type (supports about 50 types). You can generate random data conforming to a specific format every time the test runs and also provide your test data. Here are the tips and tricks for using data sets in test automation.

Maintaining Test Automation Scripts

- Challenge: Automated test scripts require maintenance to adapt to changes in the application’s UI, functionality, or underlying technology stack. Without proper upkeep, automation scripts can become obsolete.

- Solution: Allocate time for regular script maintenance. Testers should review and update scripts as necessary, ensuring they remain aligned with application changes. Version control systems can help track changes and collaboration.

Use testRigor’s self-healing capabilities, forget test maintenance, and use the saved time and effort in refining your testing game. testRigor automatically detects the changes and incorporates them in your test scripts whenever there is any change in the element attributes or application.

testRigor’s top features powering the web, mobile, and desktop testing solve your testing challenges quickly within budget.

The Future of Software Testing

Artificial Intelligence in testing has arrived, and it will stay here. The reasons are many, and the major ones are ease of use, quick test creation, faster execution, less maintenance efforts, and excellent test coverage. All of these result in cost reduction with less effort. AI-powered tools like testRigor enable manual testers, business analysts, sales teams, and everyone on your team to be automation experts without any learning curve.

Generative AI, Natural Language Processing (NLP), and Machine Learning all these latest technologies are refining themselves daily and shaping the QA paradigm effortlessly.

Conclusion

In the ever-evolving world of software application testing, the diversity of testing types and the persistent challenges testers face underscore the critical role played in delivering quality software. The future promises more automation, AI-driven testing, and seamless integration within agile and DevOps ecosystems. However, the core principles of thoroughness, adaptability, and effective communication will remain timeless.

Software application testing will continue to evolve, keeping pace with the dynamic technology landscape. Intelligent test automation tools such as testRigor empower the whole team to deliver reliable and robust software to users worldwide.