Generative AI-Based Testing Certification

AI and Prompt Engineering

Generative AI’s main benefit is its ability to speed up initial test case creation. It lets the AI interact directly with a UI based on descriptive prompts. The first and most important prompt is the description of the application under test. Descriptions can be provided when the test suite is created, or added later in Settings > Test generation > AI-based Test Generation. The more detailed the description, the better the chance that generative AI will be able to build successful tests.

Poor description for a banking app:

This is a banking app. It does the stuff a banking app usually does, but this bank is the best. Please be smart and make test cases based on the information that only I know about my application.

Good description for a banking app:

This is a banking application for users on desktop web browsers. Users must be logged in to perform transactions. Users can view balances, inquire about transaction history, perform fund transfers, make bill payments, access financial statements, and access credit scores. Users can also apply for loans, view loan status, and use credit or mortgage calculators to estimate mortgage payments, affordability, and refinancing options. In addition, users can use the platform to browse investment and trading opportunities. The platform provides portfolios and research tools for accessing market news, analysis, and financial research reports, as well as the ability to set up alerts for specific investment opportunities such as price movements, dividend payouts, and other market events. Finally, users can leverage financial planning tools to set up budgets and goals.

The next step in using generative AI successfully is providing workflows. testRigor can provide generic workflow suggestions, but user-generated prompts work best. These prompts can be supplied through the test case description and through smaller segments called AI-based rules. The AI then uses the prompts to extract data from the page and attempt to execute the task autonomously.

Description-only prompts allow you to do either of the following:

- Generate a sample test
- Generate an actual test with AI

In both cases, creating the test is as straightforward as providing a title or description of the test case and clicking the corresponding button. If you opt for the sample test, you will receive a series of steps in the correct format, which you can then tailor to your specific needs. If you opt to generate an actual test with AI, testRigor will generate a comprehensive test based on your application.

After generating the test, you have the option to modify values, add more assertions, or make any other necessary adjustments. This flexibility allows you to tailor the test case to perfectly suit your specific requirements.

Watch the video for a detailed step-by-step guide on how to use this feature.

AI-based Rules

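You can write free-flowing sentences in the “Custom steps” multiline edit box, such as “Find and select a Kindle.” testRigor will treat such a sentence as an AI-based rule and use AI to work out the sequence of steps needed to carry it out.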

If the rule doesn’t produce the sequence of steps you anticipated, there are two ways to address it:

- Prompt Engineering

- Fixing the steps

Prompt Engineering

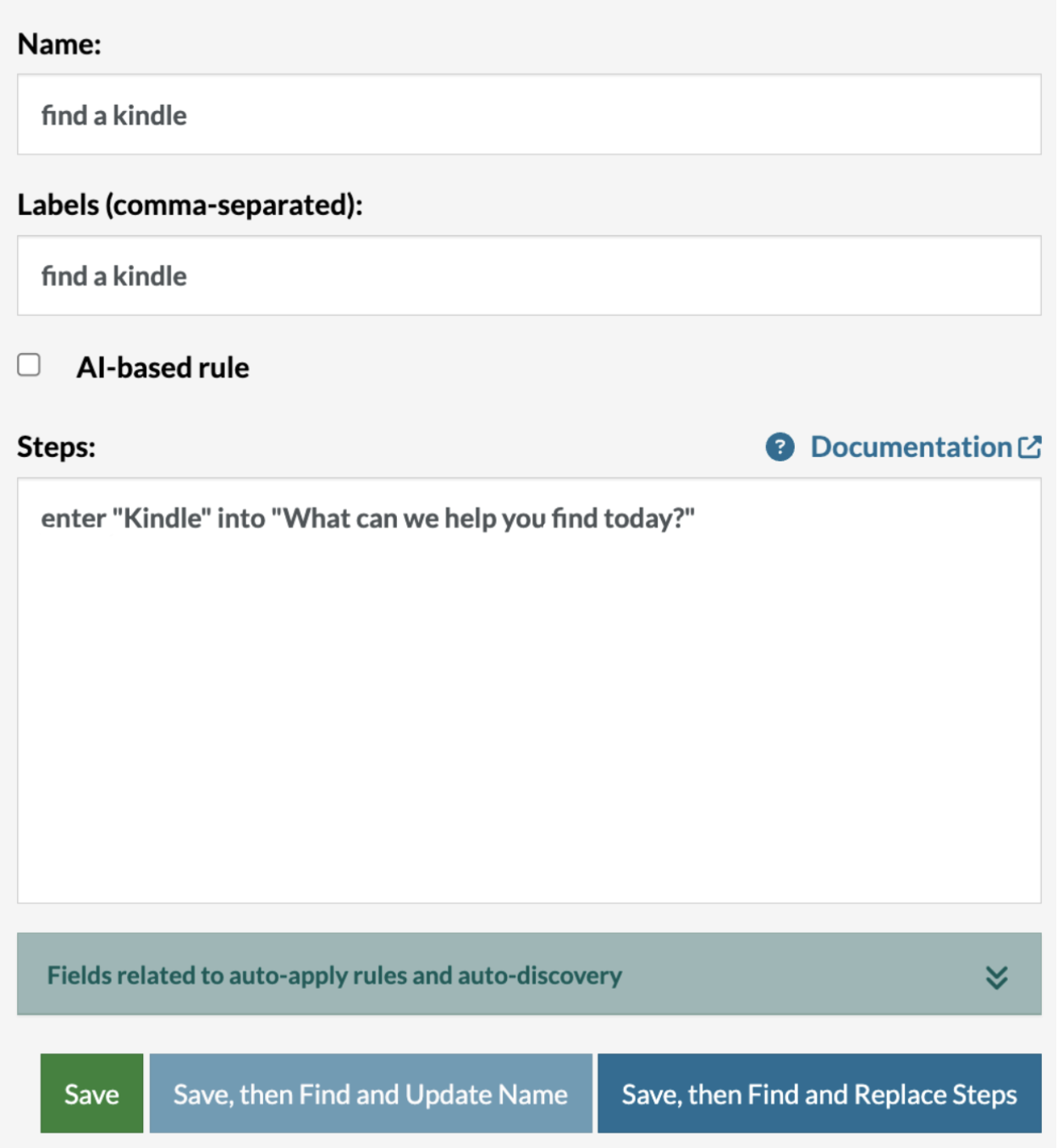

You can rename the rule to convey more clearly what the AI should accomplish. From the AI’s standpoint, the rule’s name acts as a “prompt” that directs its actions, so adding more context to the name can sometimes help.

For example, rename “find a Kindle” to “find and select a Kindle.”

Fixing the Steps

Alternatively, you can directly adjust the steps. Navigate to the Rules section on the left, locate the rule, and deselect the “AI” checkbox, which will then reveal the underlying steps.

In this scenario, you can add a step like “click ‘Kindle Paperwhite’” to make sure the test proceeds to the next stage. In general, you can modify the steps as needed. Deselecting the “AI” checkbox turns the rule into a regular one that bypasses the AI and strictly follows your sequence of steps. Still, any of the instructions you add can be AI-based rules in their own right.

When to use Prompt Engineering vs. Fixing the Steps

Using AI can make your tests more stable because it lets you describe steps at a higher level of abstraction. For instance, “find and select a Kindle” does not depend on the details of your search system or the exact steps needed to carry it out. The trade-off is that AI-based operations may be somewhat slower, and the AI might occasionally misinterpret your intent unless you provide thorough details.

If your goal is to verify that a specific feature works as described in the specification, you might opt for explicit commands instead. This keeps the test flow aligned with the specified requirements, and the test will fail if any step does not behave as expected.

For example, an AI rule such as “choose a specific Kindle” would allow the AI to autonomously select any of the actual products.

As a guiding principle, use AI when the details of how a step is achieved or performed are inconsequential and unlikely to change. Conversely, use specific steps when verifying that functionality works in a particular way or uses specific terminology.

Test Case Generation (Corner Cases)

testRigor can automatically generate corner cases for any given test case.

-

The cases generated will skip actions that enter/type data into fields one by one and find the errors associated with the step that has been skipped.

-

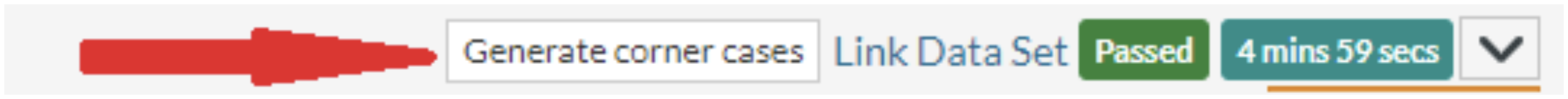

The Generate corner cases button is located on the information bar of each test case on the left of the Link data set link.

- Cases that have been generated will not have the option to generate other corner cases. They will have a label or text indicating that they have been generated as corner cases instead.
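To make the “skip one data-entry step at a time” idea concrete, here is a minimal Python sketch of how corner-case variants could be derived from a test. It is not testRigor’s implementation. It assumes that each step is a plain string and that data-entry steps begin with `enter` or `type`. The names `CornerCase`, `is_data_entry`, and `generate_corner_cases` are hypothetical, and checking for the error that the missing input should trigger is not modeled.

```python
from dataclasses import dataclass


@dataclass
class CornerCase:
    """One generated variant: the original steps minus a single data-entry step."""
    name: str
    skipped_step: str
    steps: list[str]


# Hypothetical convention: a step starting with one of these verbs types data into a field.
DATA_ENTRY_VERBS = ("enter ", "type ")


def is_data_entry(step: str) -> bool:
    """Return True if the step enters or types data into a field."""
    return step.strip().lower().startswith(DATA_ENTRY_VERBS)


def generate_corner_cases(test_name: str, steps: list[str]) -> list[CornerCase]:
    """Build one variant per data-entry step, each skipping exactly that step.

    All other steps are kept in order, so the variant still submits the form
    without the skipped input. Verifying the resulting error is not modeled here.
    """
    cases = []
    for i, step in enumerate(steps):
        if not is_data_entry(step):
            continue  # only data-entry steps produce corner cases
        remaining = steps[:i] + steps[i + 1:]
        cases.append(CornerCase(name=f"{test_name} (corner case: skip step {i + 1})",
                                skipped_step=step,
                                steps=remaining))
    return cases


if __name__ == "__main__":
    login_test = [
        'enter "jacob" into "Username"',
        'enter "secret" into "Password"',
        'click "Sign in"',
        'check that page contains "Welcome"',
    ]
    for case in generate_corner_cases("Login", login_test):
        print(case.name)
        print("  skipped:", case.skipped_step)
```

With two data-entry steps in the example login test, the sketch yields two variants: one without the username and one without the password. Non-entry steps such as the click are never skipped.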