Is AI Slowing Down Test Automation? – Here’s How to Fix It


These days, we are witnessing the presence of AI everywhere. Software testing is no exception. From figuring out what to test to automating said test cases, experienced engineers are starting to rely on AI tools.

By popular opinion, you’d expect AI to make software development and testing a smooth-sailing journey – you, the master, telling your AI tool to do things for you. But here’s the interesting twist in the story:

AI + Traditional Test Automation Framework = Slower Test Automation

Strange, you might think?

Well, not really.

Let’s learn why, while also learning the best way to leverage AI in test automation.

AI Slowed Down Automation Efforts?

A recent study by METR showed some baffling findings: AI slows down software development in real-world complex scenarios by 19%.

The study was done with a group of experienced open-source developers who were recorded over a stipulated period of time as they performed different SDLC tasks. Some fascinating discoveries that came up were:

- Despite the results (a 19% slowdown), developers still believed that they were being more productive. A clear gap between perception and reality.

- Some participants cited that they were willing to trade speed of execution for the ease of use of AI. They found it cognitively easier to interact with AI (like LLMs).

- Faith in AI’s suggestions remained strong even though participants accepted less than half of them. Most spent extra time cleaning up AI’s output.

This study doesn’t suggest that you should stop using AI, or that there aren’t better ways to use it. Its key message is that the common assumption, that patching AI onto any process will automatically make it faster and more efficient, does not hold up.

What Does This Mean for Test Automation?

How you use AI for test automation makes all the difference. But before we break that down, let’s first align on what we mean by traditional test automation.

This is What Traditional Test Automation Looks Like…

By traditional test automation, we are referring to test automation frameworks (like Selenium, Appium, Cypress, and Playwright) that:

- Require you to write automated test scripts in code.

- Need testers or automation engineers with programming skills to work with them.

- Derive their automated test scripts from a series of detailed, pre-defined manual test cases.

- Often incur heavy maintenance costs for both infrastructure and test scripts.

- Tend to rely heavily on the implementation details (like the DOM structure) of the UI elements of the application under test, making tests brittle and prone to failure.
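To make the brittleness point concrete, here is a minimal Python sketch. It does not use Selenium or a real browser. It parses two versions of a made-up login page with the standard library's `xml.etree.ElementTree` and compares a structure-based locator with a lookup by visible text. The markup, the locator path, and the function names are all assumptions invented for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical login page, before and after a harmless redesign
# that wraps the button in an extra <div>.
PAGE_V1 = """
<html><body>
  <form>
    <input name="user"/>
    <button>Log in</button>
  </form>
</body></html>
"""

PAGE_V2 = """
<html><body>
  <form>
    <input name="user"/>
    <div class="actions"><button>Log in</button></div>
  </form>
</body></html>
"""

# Structure-based locator: "the button that is a direct child of the form".
STRUCTURAL_LOCATOR = "./body/form/button"


def find_by_structure(page: str):
    """Locate the button by its exact position in the element tree."""
    return ET.fromstring(page).find(STRUCTURAL_LOCATOR)


def find_by_visible_text(page: str, text: str):
    """Locate a button by the text a user would see, wherever it sits."""
    for button in ET.fromstring(page).iter("button"):
        if (button.text or "").strip() == text:
            return button
    return None


if __name__ == "__main__":
    for name, page in (("v1", PAGE_V1), ("v2", PAGE_V2)):
        by_structure = find_by_structure(page) is not None
        by_text = find_by_visible_text(page, "Log in") is not None
        print(f"{name}: structural={by_structure} visible_text={by_text}")
```

The button looks and behaves the same to a user in both versions, yet the structural locator stops matching after the redesign, while the lookup by visible text still finds it.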

The Question to Ask Yourself – How are You Using AI for Test Automation?

Many of these traditional tools are being paired with AI add-ons. This might seem like a good idea, but going by the study’s findings, it may actually be slowing you down.

Let’s understand this a bit better through the scenarios below.

When AI is Added to Traditional Test Automation for Test Generation

If you’re using an AI tool like an LLM in addition to your test automation framework to write test cases and scripts that you can directly run as a part of your test automation suite, there are going to be a few problems.

- Your first and foremost hurdle is going to be generating a prompt that can communicate the entire length and breadth of your requirement. The art of prompt engineering isn’t as easy as it seems.

- While AI can give you boilerplate code, it cannot create fully functional test scripts that are ready to run. That kind of contextual understanding is hard to get with just an AI add-on tool.

- Even if your tool does give you test script code, you’ll end up spending more time making it suitable for execution within your test automation framework. An interesting example of this is when you want to run the same test across platforms like the web and mobile, which tends to be the case for most modern applications. The AI add-on might give you a coded script, but you’ll still have to adjust it according to your test automation framework’s syntax (import dependencies, classes, etc.) and also make changes to the code logic itself based on the application, the coding standards you follow, and the context you have of the application.

Based on the research, experienced engineers were more productive without AI, contrary to their own assumption. This comes down to AI overhead: you need to review what AI has done and make corrections or rewrites as needed.

When AI is Added to Traditional Test Automation for Test Maintenance

In theory, AI might seem like the ultimate ghostbuster of test maintenance, but in practice, it may not be that straightforward, especially if you’re using a patch-up solution with your traditional test automation framework. Here’s why:

- UI elements (like buttons, fields, etc.) are the basis of end-to-end or UI testing. You interact with them and verify different operations based on their behavior. Traditional testing frameworks tend to rely on the implementation (or code-level) details of these UI elements, like XPaths or CSS selectors. However, the problem with that is that modern websites are dynamic in nature, meaning that the code behind these elements keeps updating. What’s called a “login button” today might be changed to “signup” tomorrow. So what can AI do here? It can help you find better UI element locators, which, again, might be those code-level details. So, will you use your AI tool to identify new locators for the same UI element every time the test fails due to changing implementation details? Doesn’t seem to handle the actual problem – reliance on code-level details.

- Let’s say you’ve used a tool that supports self-healing of test cases. This means that it can identify the failures and give you alternative fixes to make the script work. However, it doesn’t always work well because the AI doesn’t have your contextual awareness of the project and test case. If it goes on a “self-healing rampage”, you’d end up with more maintenance work. So again, as the study above showed, you’re less likely to rely on AI’s suggestions for fixing what’s broken.
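The self-healing risk can be sketched with a toy example. The Python snippet below is not how any particular tool heals locators. It is a deliberately naive healer, written for illustration, that replaces a broken element id with whichever remaining id is most similar as a string, using the standard library's `difflib`. The ids and function names are hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical scenario: the test used to click the element with this id...
BROKEN_LOCATOR = "login-button"

# ...but after a redesign the page only contains these ids.
# "signin-btn" is the renamed login button; "signup-button" does something else.
CANDIDATES = ["signin-btn", "signup-button"]


def similarity(a: str, b: str) -> float:
    """String similarity between 0.0 and 1.0, with no knowledge of the app."""
    return SequenceMatcher(None, a, b).ratio()


def naive_heal(broken_locator: str, candidates: list[str]) -> str:
    """Pick the candidate whose id looks most like the broken one."""
    return max(candidates, key=lambda c: similarity(broken_locator, c))


if __name__ == "__main__":
    healed = naive_heal(BROKEN_LOCATOR, CANDIDATES)
    print(f"healed '{BROKEN_LOCATOR}' to '{healed}'")
```

In this toy run the healer settles on `signup-button`, because it shares the long `-button` suffix with the old id, even though someone who knows the application would pick `signin-btn`. The test may then pass or fail for the wrong reason, which is the kind of extra review work described above.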

Test automation maintenance can consume up to 100% of the QA team’s time. The main drivers of this unwanted cost are flaky tests, brittle element locators, and skill gaps (coding expertise). This is by far the biggest time sink and the primary reason why most companies don’t have 90%+ of end-to-end tests automated.

The Final Verdict – Is AI Slowing Down Test Automation?

Well, the answer is Yes and No …

Yes, when you try to shove AI into existing test automation frameworks. This is because, at the heart of it, you’ve still not addressed the problems that these traditional test automation frameworks like Selenium and Playwright face.

No, if you smartly leverage AI for what it’s good at.

Here’s how …

How To Use AI’s Strengths For Better Test Automation?

To make the most of AI in test automation, you need to leverage AI’s strengths. Look at AI agents. In a patched-up solution, the AI part tries to compensate for the shortcomings of the underlying framework. That is a fundamental design mismatch: the solution is still code-dependent, adds complexity and integration overhead, limits the scope of the AI add-on, and remains reactive. AI agents, in contrast, are holistic solutions.

testRigor’s “AI agents” are not separate plugins but the very engine that drives all test creation, execution, and maintenance. The AI is deeply integrated into how elements are identified, how actions are performed, and how tests adapt. It leverages the AI agent concept to move from “how to click a button” (technical implementation details that tools like Selenium focus on) to “what to do” (when AI is building a test case).

This shift makes automation faster to create, significantly more stable, and accessible to a much wider range of team members, directly addressing the biggest pain points of traditional, code-based test automation.

Here’s how testRigor helps with holistic improvements in test automation.

- Ability to Create Test Scripts without Depending on Coding Skills: A huge feature that AI agents like testRigor offer is the ability to understand human instructions without programming languages. The tool does not act like a programming language interpreter. This means that testRigor uses AI (Natural Language Processing (NLP) and Generative AI) to understand and automate test cases that you write in plain English, or natural language. This helps in the following ways:

  - You can make QA a shared responsibility, as it should be. Quite often, the people with the most product knowledge and user behavior information are not adept at coding. If they want to participate in test automation, they end up spending a lot of time in back-and-forth with automation engineers, which costs everyone involved productivity and time. When your tool understands regular English, these team members (like product owners and manual testers) can directly write, edit, and review automated tests.

  - Apart from letting you write test scripts in English, testRigor simplifies the process even further. The tool can create functioning test cases based on a comprehensive description of the application it is supposed to test. This is a good starting point, say, for dealing with new features under development (BDD approach) and for documenting every important area to be tested. Read more about the other simple ways of creating test cases with testRigor over here – All-Inclusive Guide to Test Case Creation in testRigor.

- Understanding and Adaptation: AI agents that use AI vision and NLP can “understand” an application’s UI much like a human does. They don’t just look for hardcoded technical locators; they interpret visual cues, text labels, and the overall context of a UI element. If you’re using testRigor, you do not need to sweat over XPaths and CSS selectors anymore. Just write how the element appears to you on the screen.

- Adapting to breaking changes in the UI and Negligible Maintenance: When the UI changes, the AI can adjust the test script’s steps to match and present them for the user’s review. And because testRigor doesn’t rely on technical locators, there is no need to constantly fix tests when XPath/CSS selectors change, which makes maintenance far easier to deal with.

- Unified Platform for End-to-End Testing: testRigor is built to handle complex end-to-end user workflows spanning through web, mobile, native desktop, and/or mainframes, including interactions with LLMs, CAPTCHAs, chatbots, emails, SMS, 2FA, databases, APIs, and even phone calls, all within the same plain-English scripting paradigm. This avoids the need to stitch together multiple tools and frameworks. With most code-based tools, you’d need separate tools and coding for each of these non-UI interactions, leading to fragmented, complex, and high-maintenance test suites.

- Easy Integrations with Existing Infrastructure: testRigor can easily assimilate into your testing ecosystem as it can integrate with CI/CD platforms, databases, and various test management tools too. You can even import existing test cases from tools like TestRail or XRay and then automate them within testRigor.

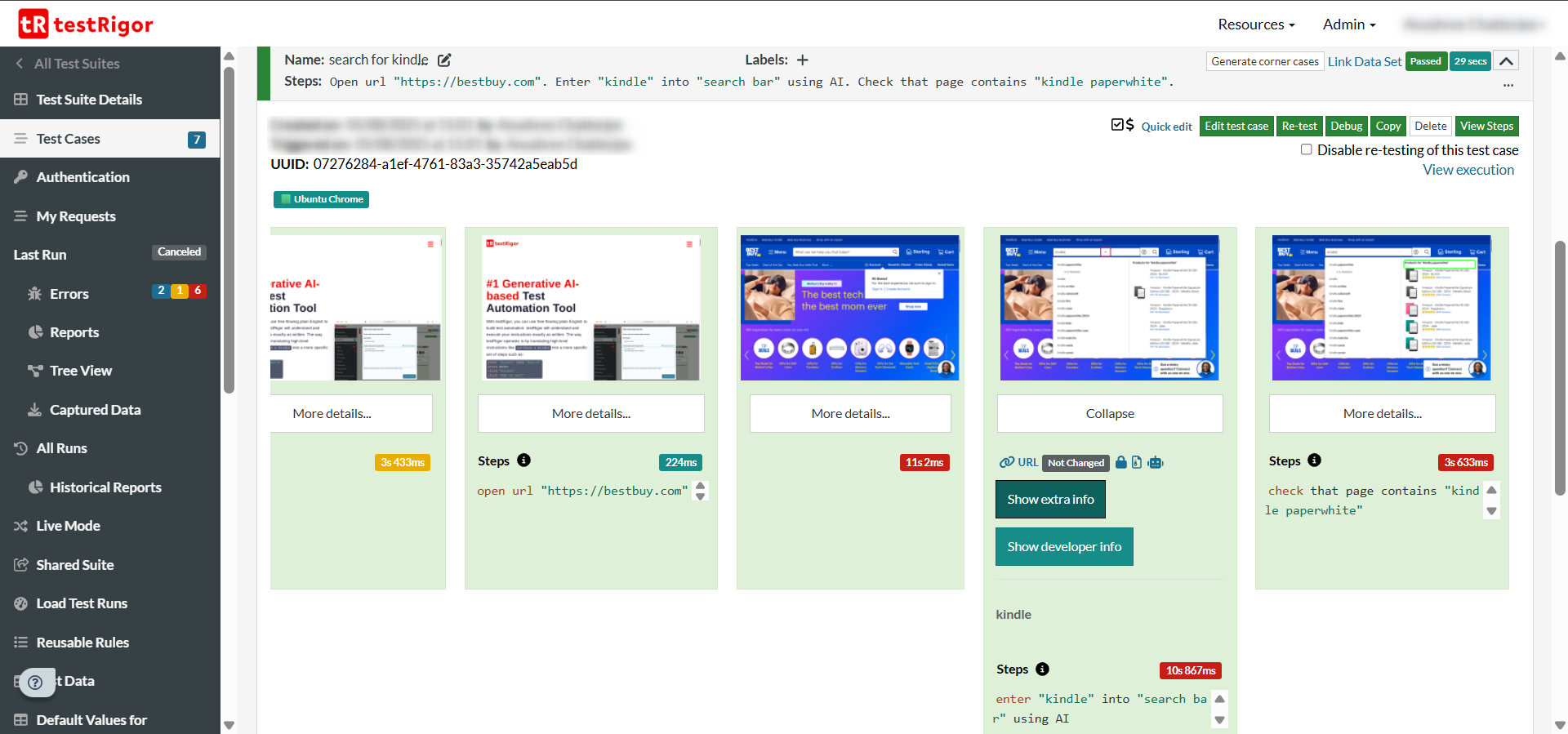

See For Yourself – An Example of Simple Test Automation with AI

enter "kindle" into "search bar"

check that page contains "kindle paperwhite"

That’s it! Simple, plain English statements describing test cases step by step, like you’d do in a manual test case. The same test case would take many lines of code if it were written in Selenium. The best part here is that you don’t have to mention anything about the exact DOM location of the “search bar” or the text “kindle paperwhite” on the Best Buy website. In the future, even if the search bar’s name changes to just “search”, your test will keep executing successfully.

A simple user interface further helps you analyze test results, which also include screen captures from each step of the test execution.

A Success Story

IDT Corporation had a goal of automating 90%+ of its test cases. They started working with Selenium and quickly climbed up to 33-34%. But then, after about a year, as the software started changing, engineers started spending all of their time maintaining the Selenium test cases instead of writing new ones. Results didn’t improve for about 3 years as test maintenance kept wearing them down.

After a lot of research, the company decided to go with testRigor. A big push for them to work with testRigor was when they saw that some of their interns, who had no prior coding background, were able to automate test cases using testRigor. Once they moved to testRigor, they went from 34% automation to 91% automation in 9 months.

With testRigor, they were able to:

- Achieve their test coverage goals – 90% test coverage

- Automate tests that could work on applications using Android, iOS, Angular, React, React Native, Flutter, etc.

- Enable every manual QA on the team to build twice as many tests as QA Engineers previously did, while still performing their manual QA work. This brought down the previous number of QA Engineers needed for UI testing from 16 to just 2 for the project

- Spend less than 0.1% of their time on test maintenance

- Achieve a 90% reduction in bugs

Read the full story over here – How IDT Corporation went from 34% automation to 91% automation in 9 months.

Summing it up

Learning: Leverage AI wisely for test automation by utilizing its strengths.

If you’re trying to naively combine AI with your traditional test automation solution, you’ll end up not only slowing down the development of tests, but also draining your resources on maintaining generated code. AI agents, in contrast, can help you achieve your goals in a sustainable way and can massively cut down test creation and maintenance costs.
